The source code of the hands-on exercises are available at the following link: https://github.com/tomomano/learn-aws-by-coding

🌎Japanese version is available here🌎

1. Introduction

1.1. Purpose and content of this book

This book was prepared as a lecture material for "Special Lectures on Information Physics and Computing", which was offered in the S1/S2 term of the 2021 academic year at the Department of Mathematical Engineering and Information Physics, the University of Tokyo.

The purpose of this book is to explain the basic knowledge and concepts of cloud computing for beginners. It provides hands-on tutorials to use real cloud environment provided by Amazon Web Services (AWS).

We assume that the readers would be students majoring science or engineering at college, or software engineers who are starting to develop cloud applications. We will introduce pracitcal steps to use the cloud for research and web application development. We plan to keep this course as interactive and practical as possible, and for that purpose, less emphasis is placed on the theories and knowledge, and more effort is placed on writing real programs. I hope that this book serves as a stepping stone for readers to use cloud computing in their future research and applications.

The book is divided into three parts:

| Theme | Hands-on | |

|---|---|---|

1st Part (Section 1 to 4) |

Cloud Fundamentals |

|

2nd Part (Section 5 to 9) |

Machine Learning using Cloud |

|

3rd Part (Section 10 to 13) |

Introduction to Serverless Architecture |

|

In the first part, we explain the basic concepts and knowledge of cloud computing. Essential ideas necessary to safely and cleverly use cloud will be covered, including security and networking. In the hands-on session, we will practice setting up a simple virtual server on AWS using AWS API and AWS CDK.

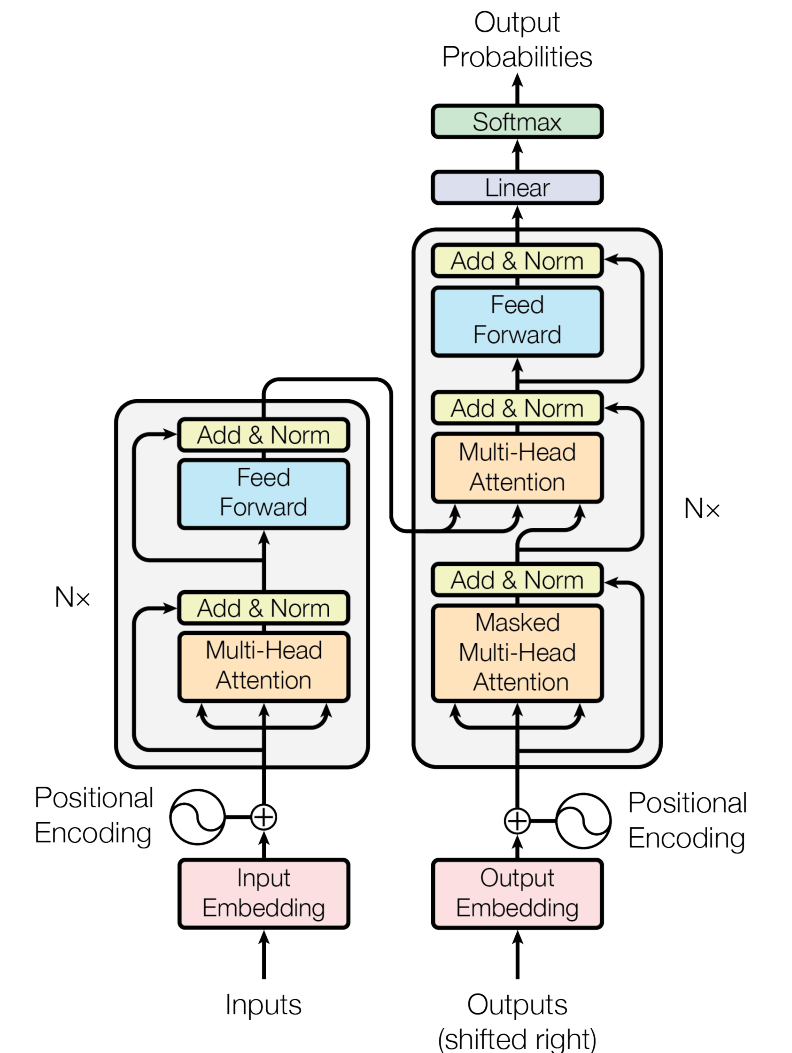

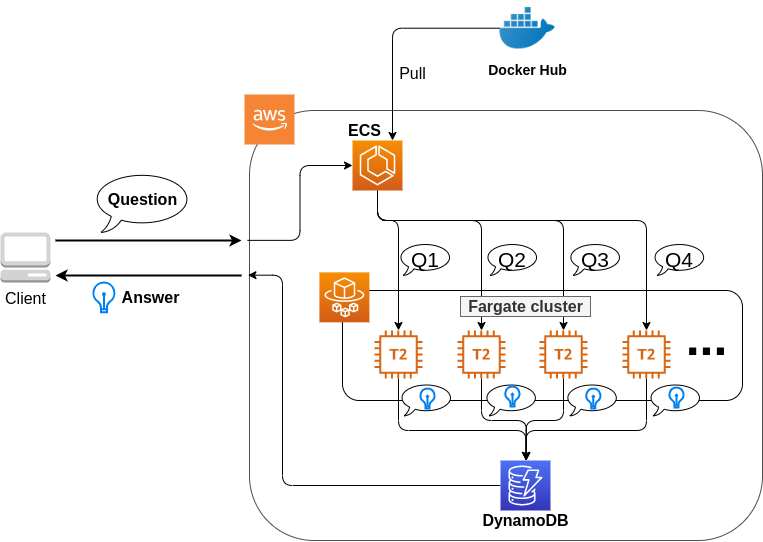

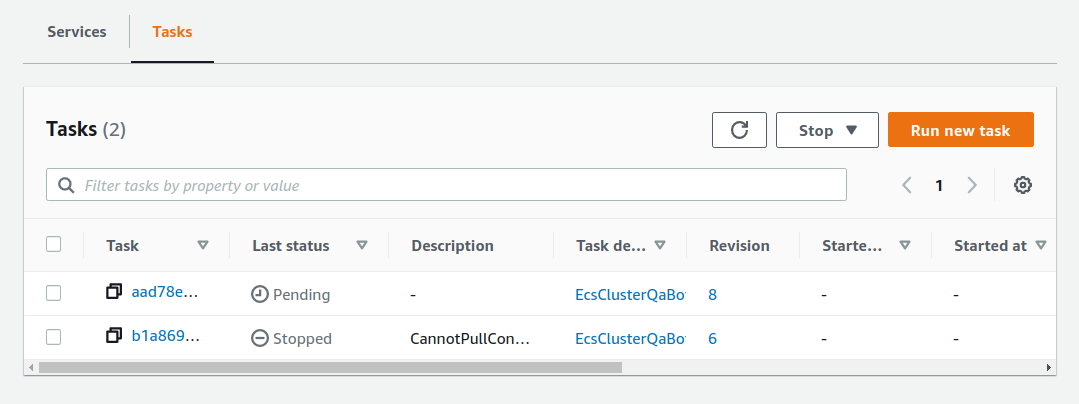

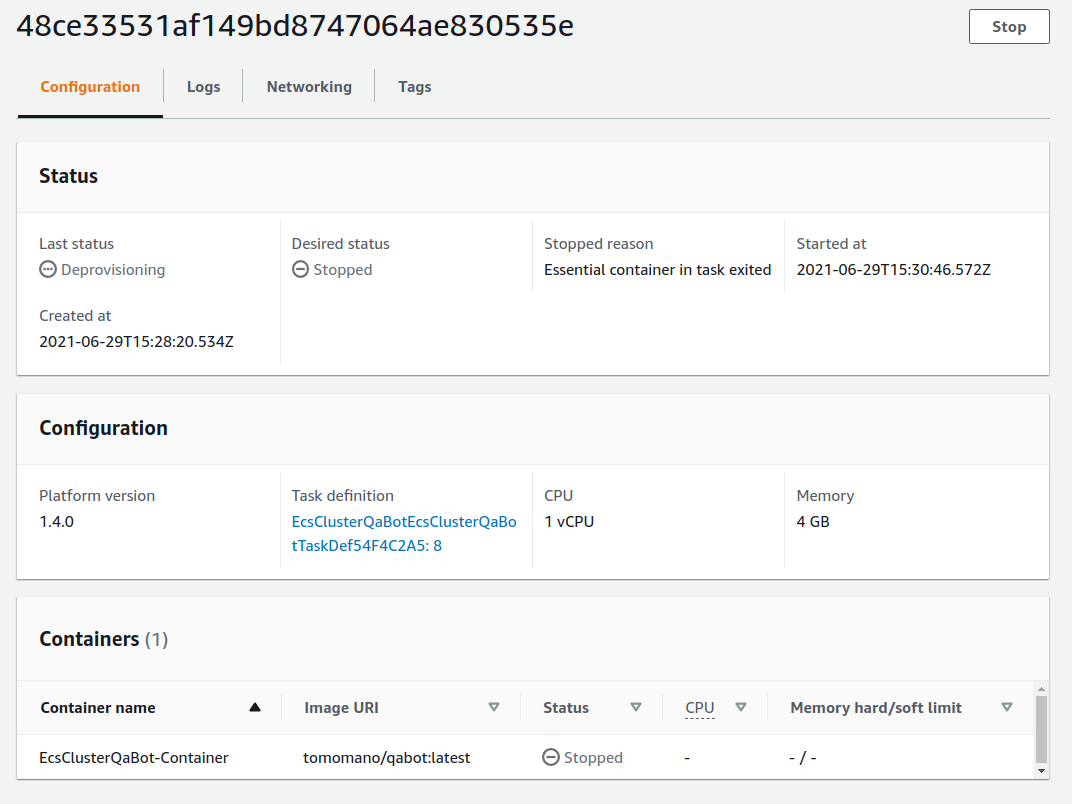

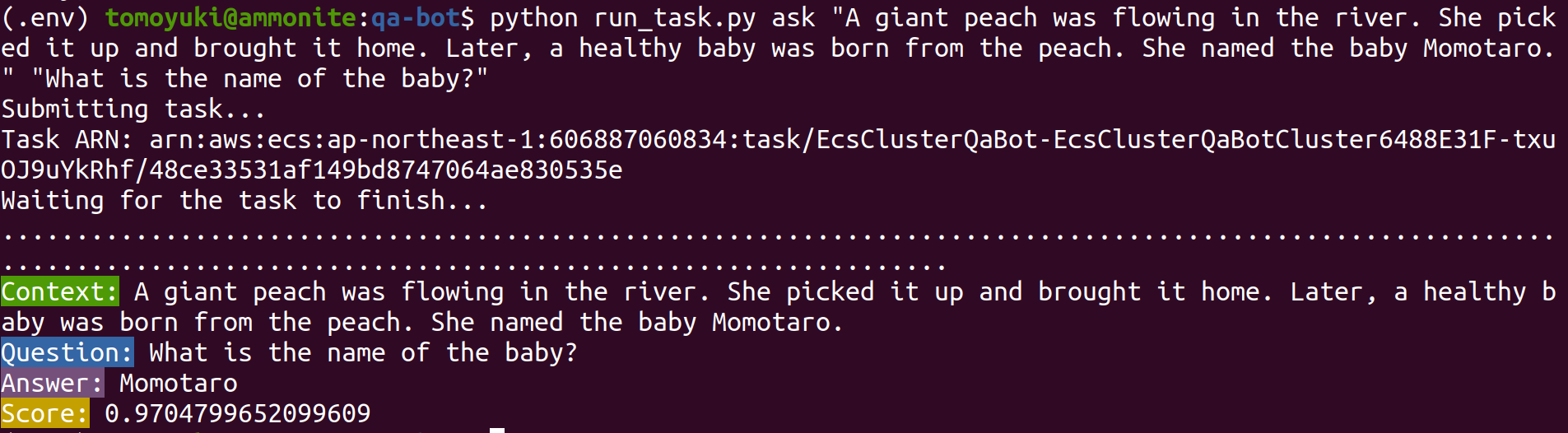

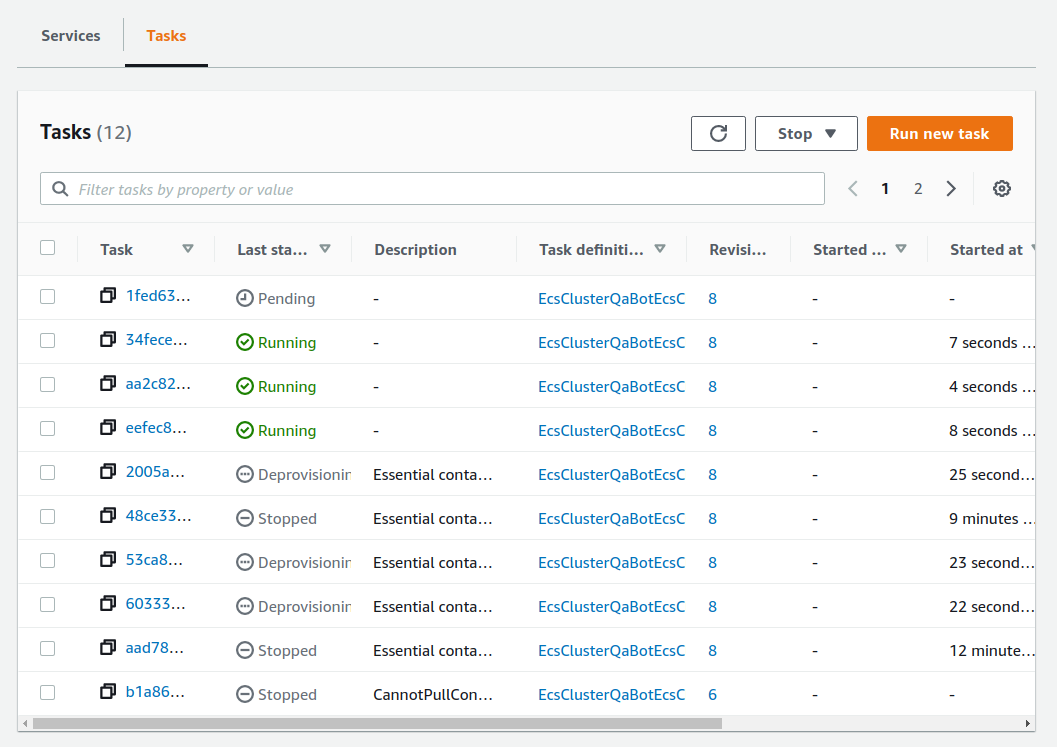

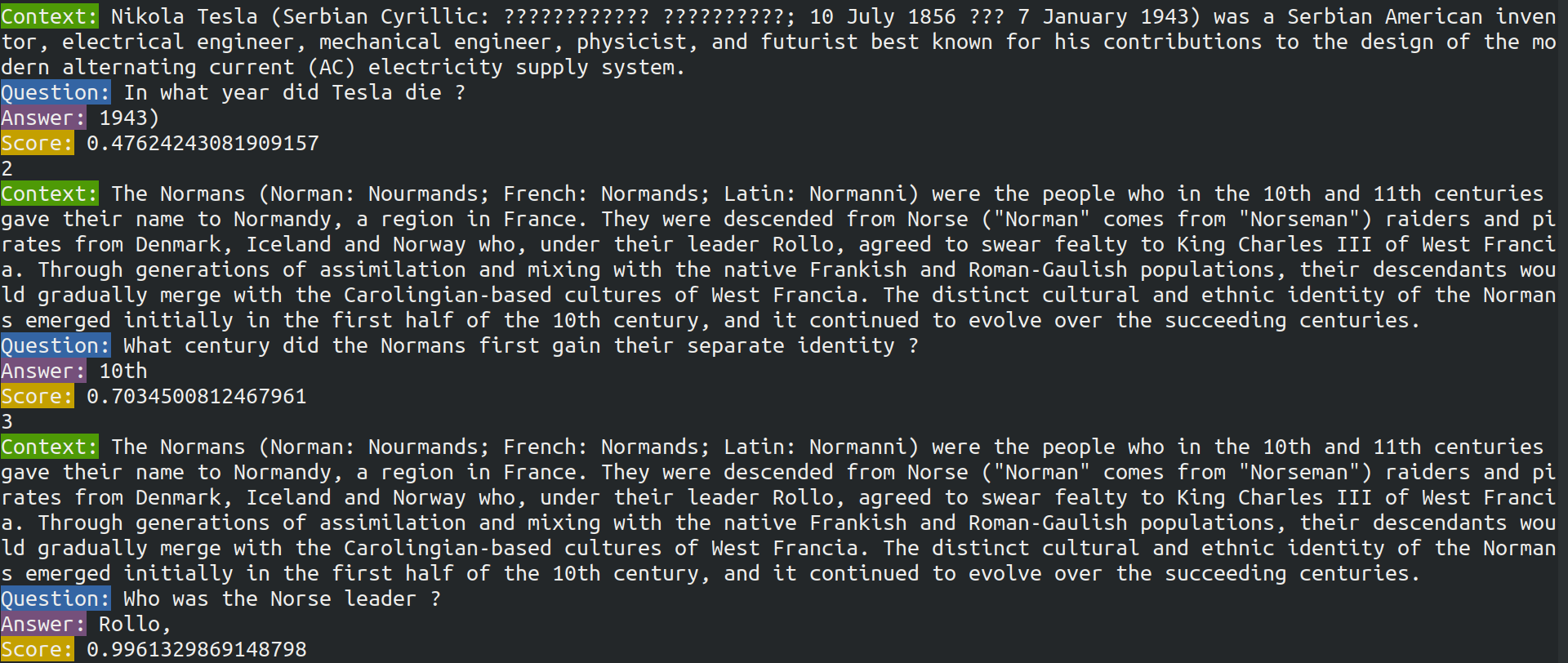

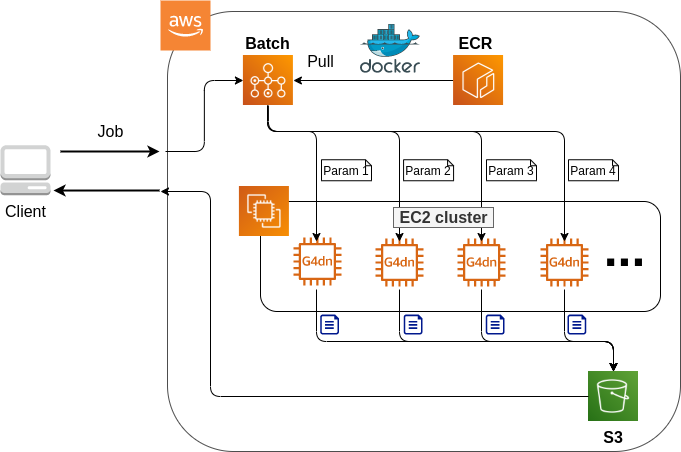

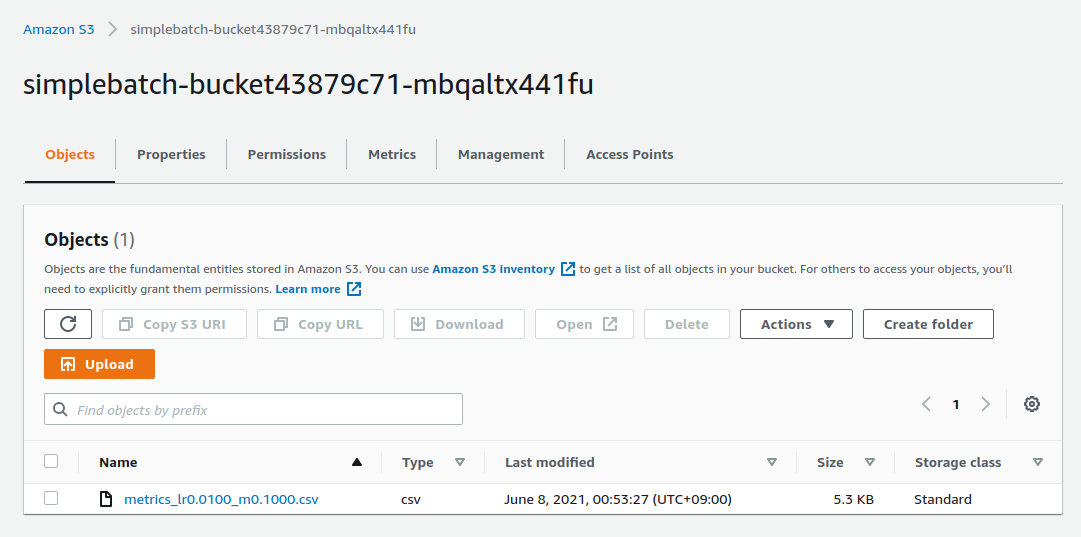

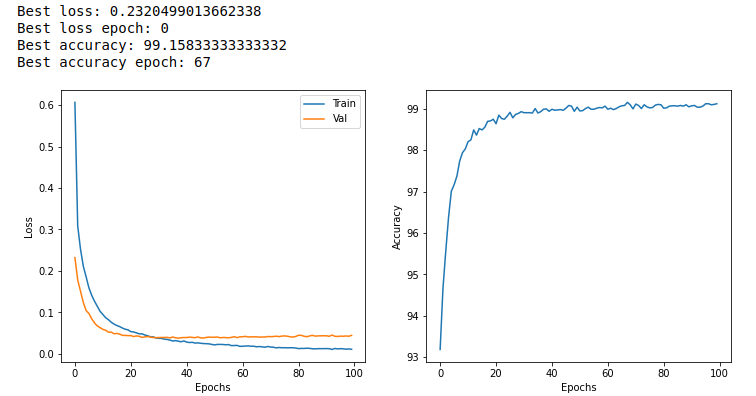

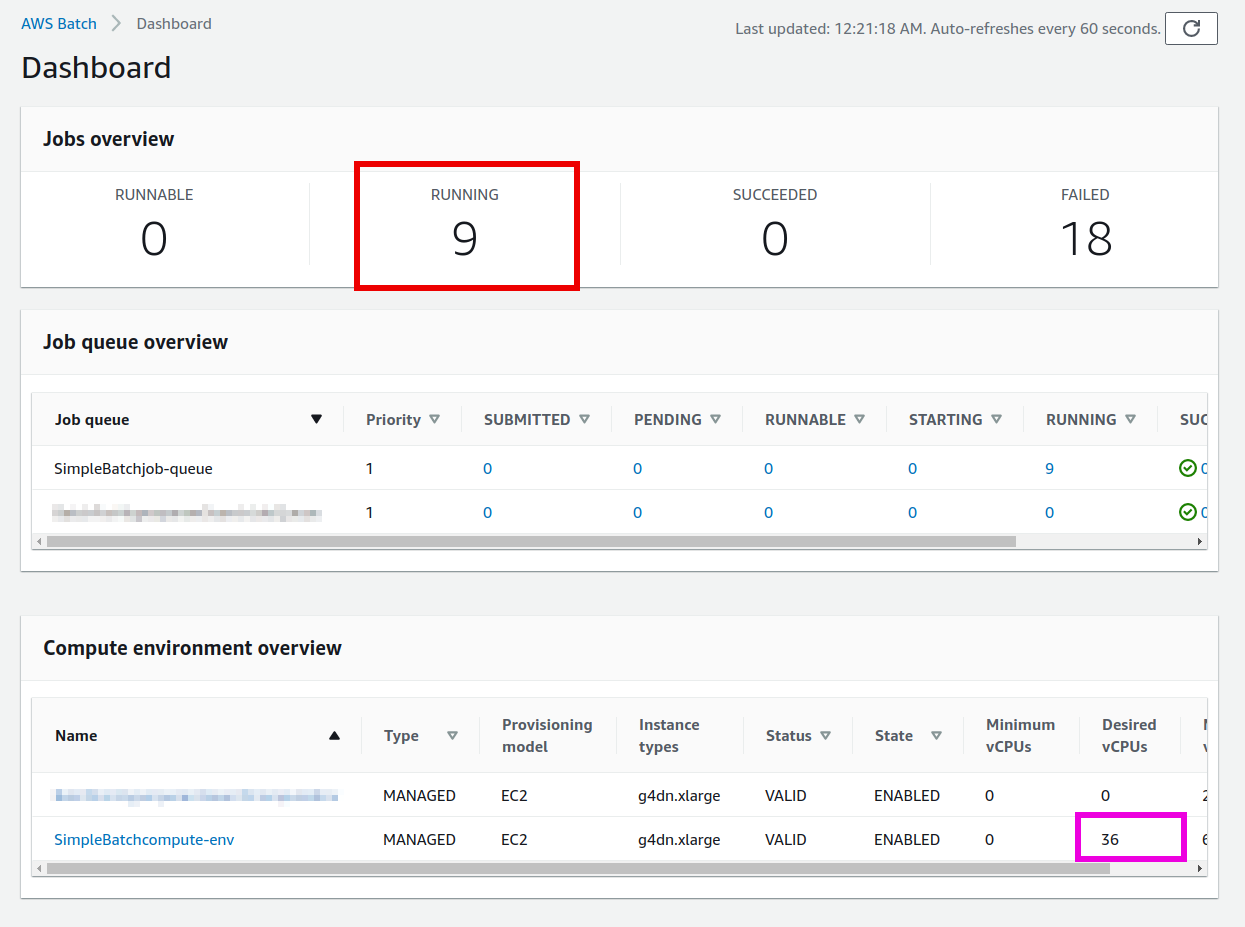

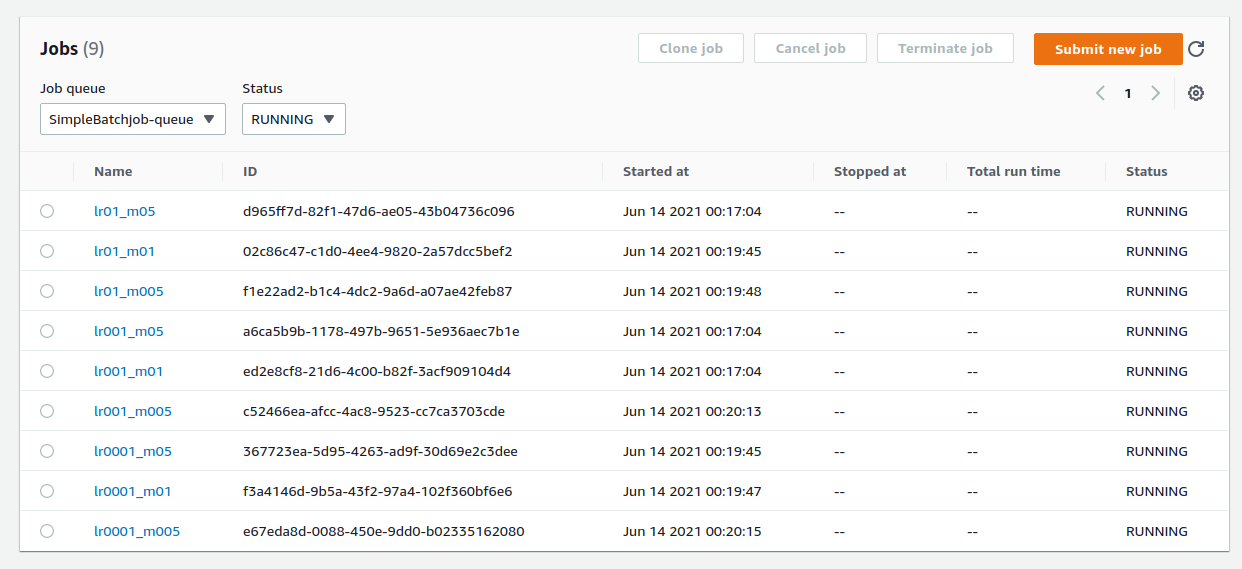

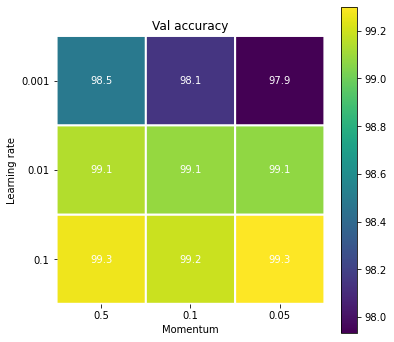

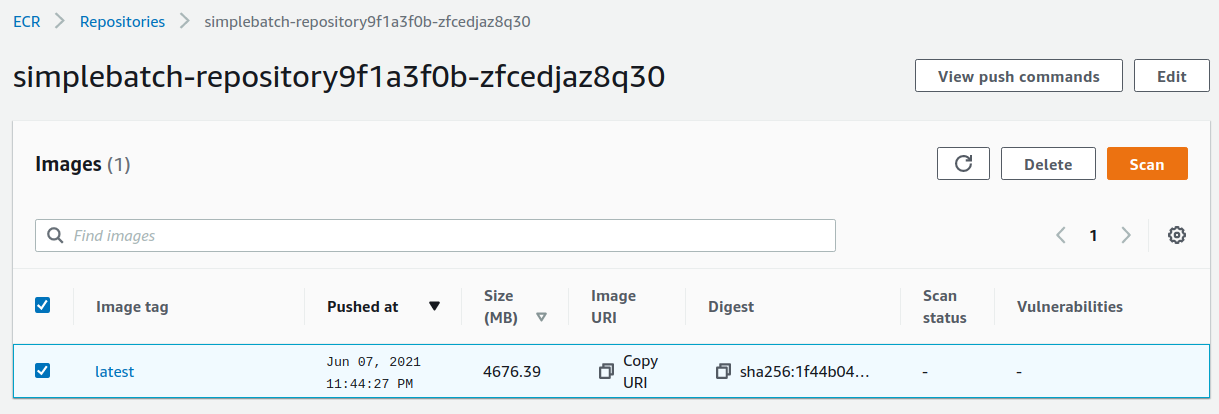

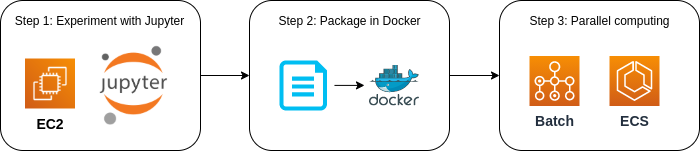

In the second part, we introduce the cocenpts and techniques for running scientific computing (especially machine learning) in the cloud. In parallel, we will learn a modern virtual coumputing environment called Docker. In the first hands-on session, we will run Jupyter Notebook in the AWS cloud and run a simple machine learning program. In the second hands-on, we will create a bot that automatically generates answers to questions using natural language model powered by deep neural network. In the third hands-on, we will show how to launch a cluster with multiple GPU instances and perform massively parallel hyperparameter search for deep learning.

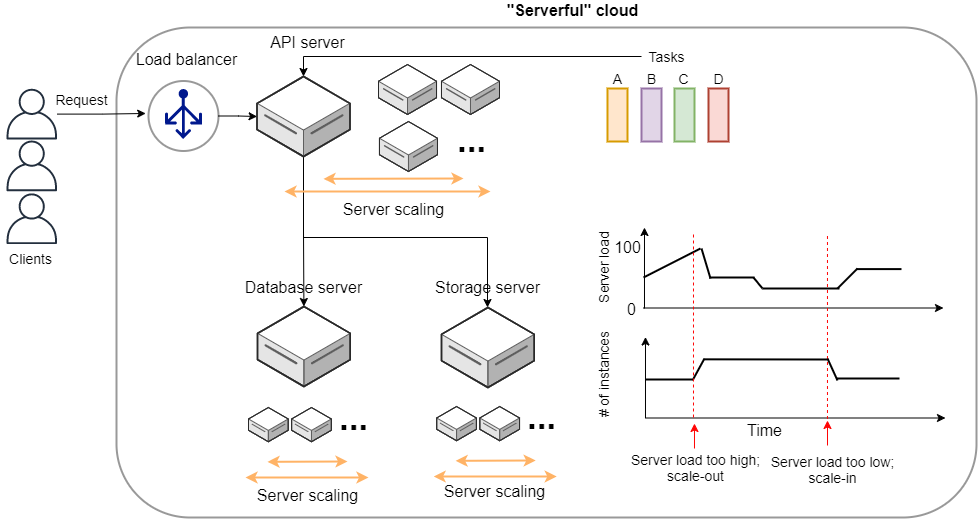

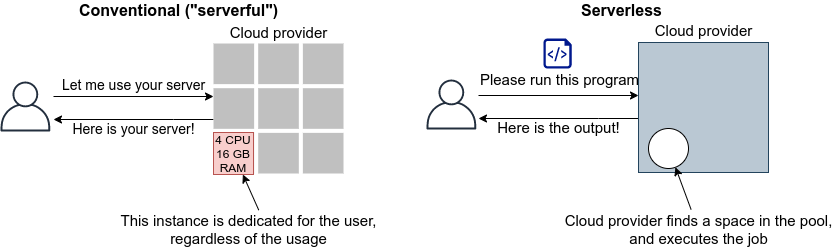

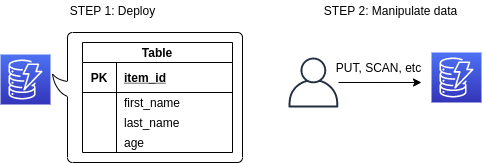

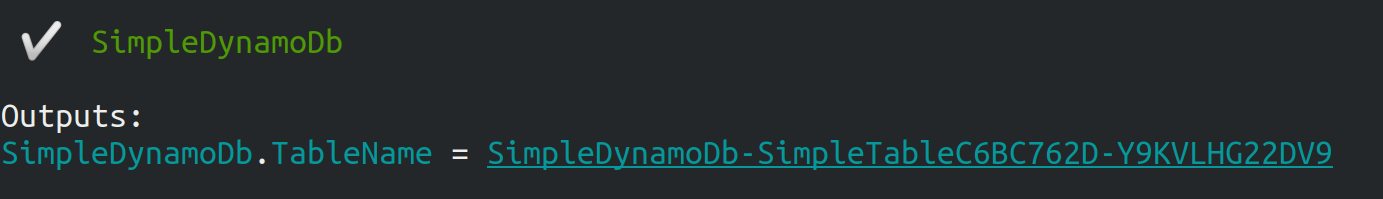

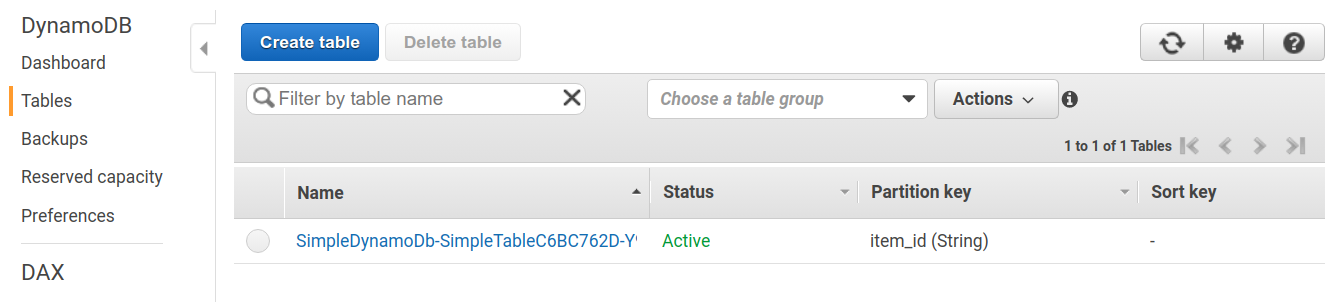

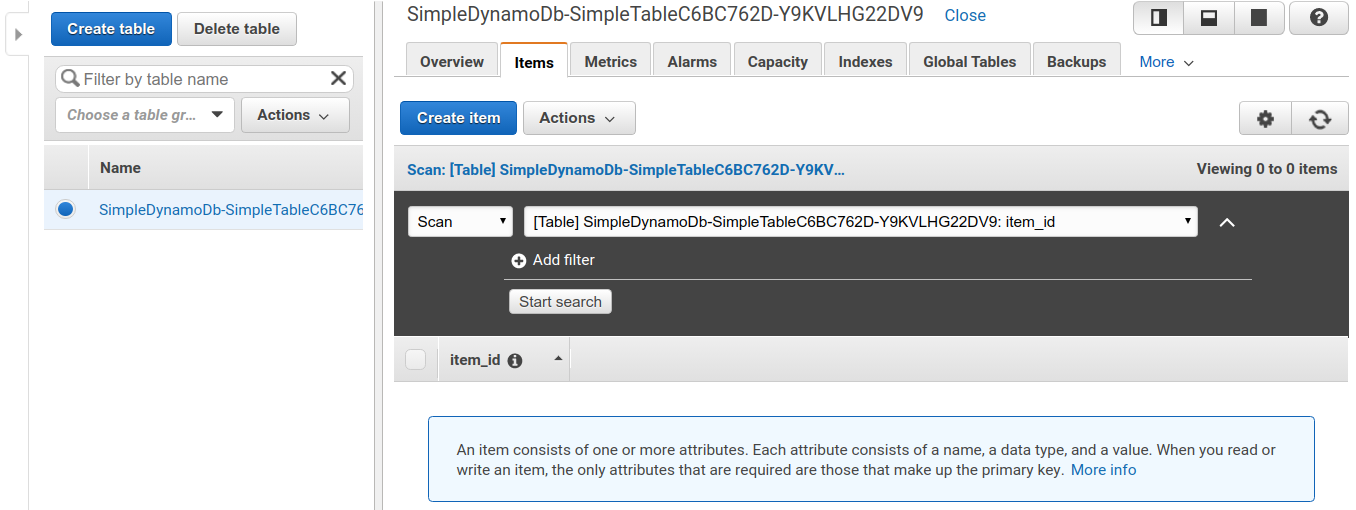

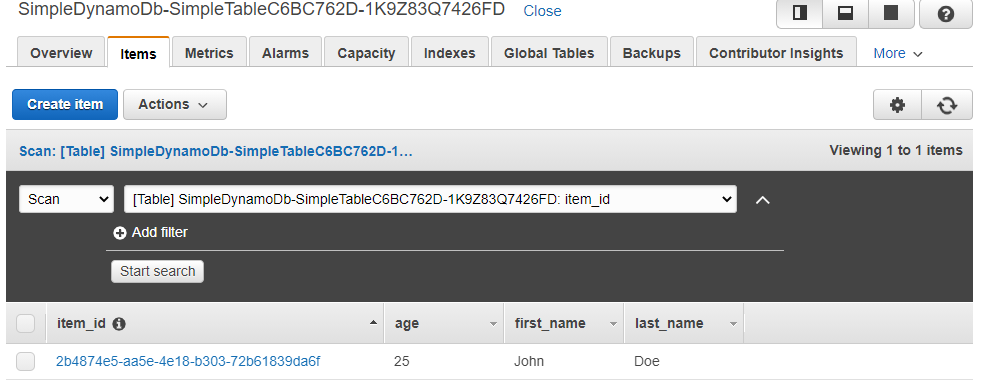

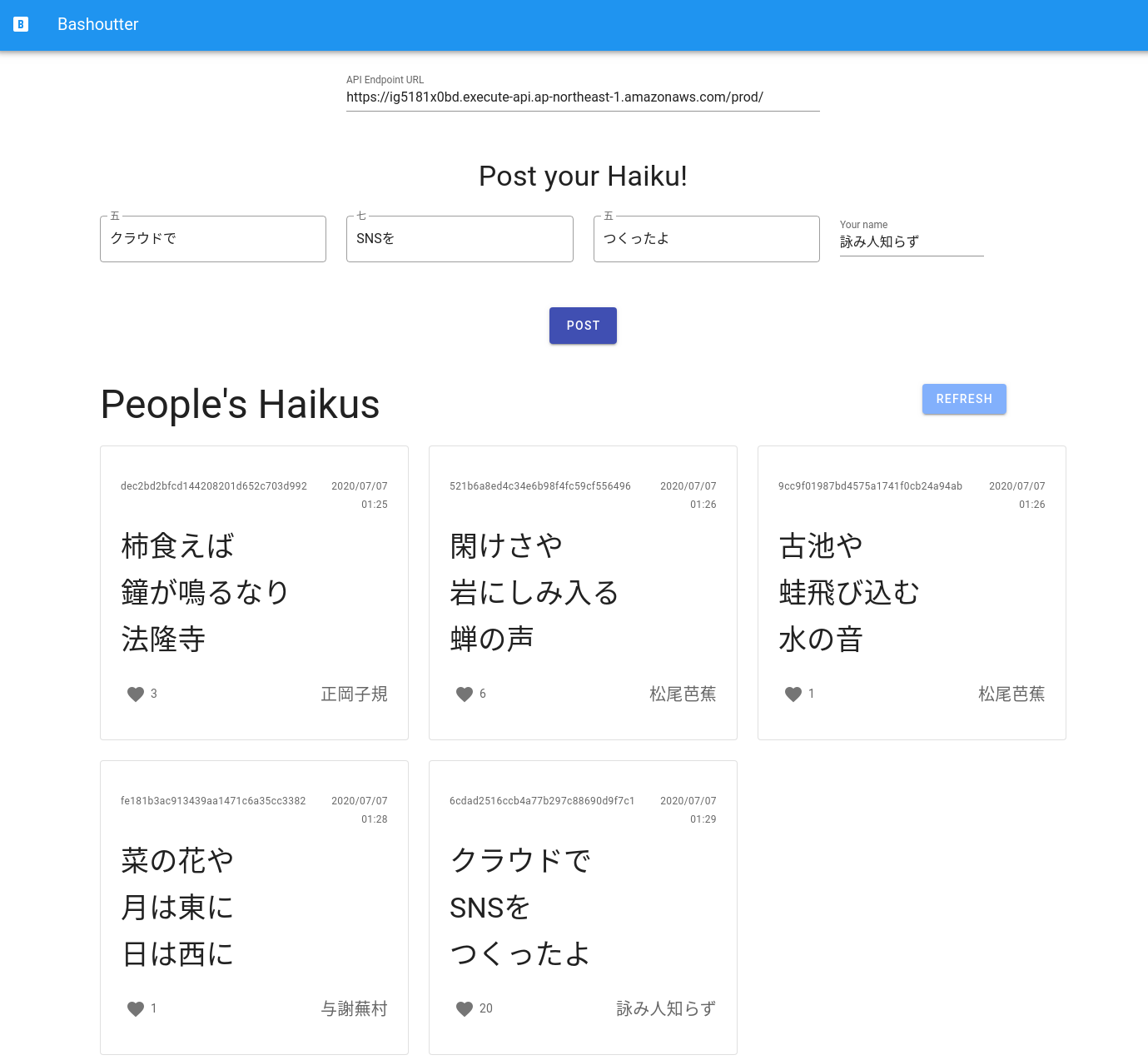

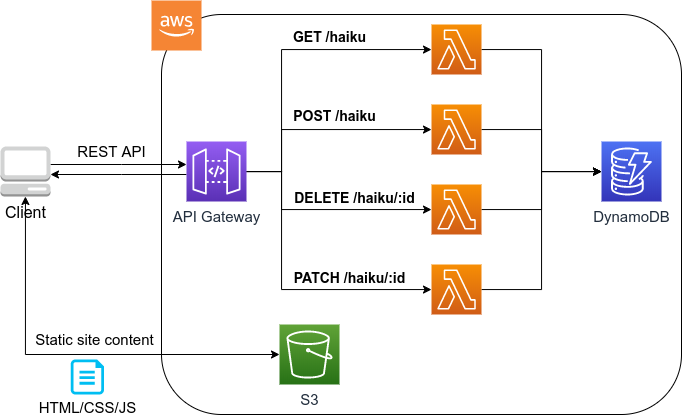

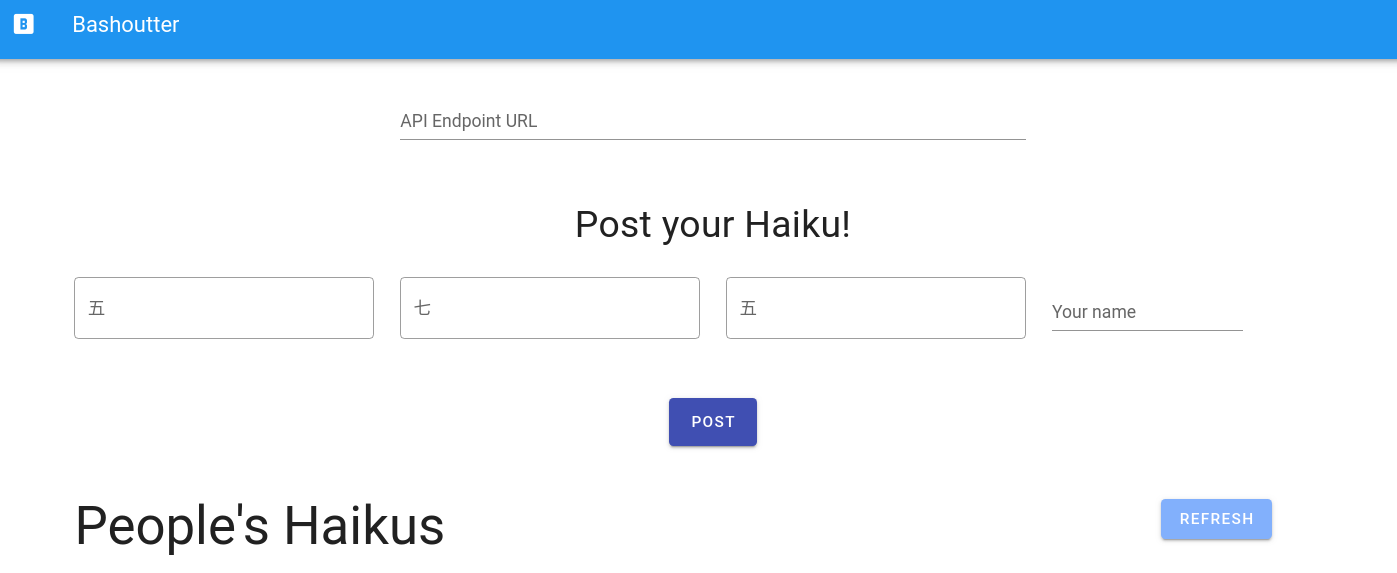

In the third part, we introduce the latest cloud architecture called serverless architecture. This architecture introduces radically different design concept to the cloud than the previous one (often referred to as Serverful), as it allows the processing capacity of the cloud system to be scaled up or down more flexibly depending on the load. In the first hands-on session, we will provide exercises on Lambda, DynamoDB, and S3, which are the main components of the serverless cloud. In addition, we will create a simple yet quite useful social network service (SNS) in the cloud using serverless technology.

These extensive hands-on sessions will provide you with the knowledge and skills to develop your own cloud system on AWS. All of the hands-on programs are designed to be practical, and can be customized for a variety of applications.

1.2. Philosophy of this book

The philosophy of this book can be summed up in one word: "Let’s fly to space in a rocket and look at the earth once!"

What does that mean?

The "Earth" here refers to the whole picture of cloud computing. Needless to say, cloud computing is a very broad and complex concept, and it is the sum of many information technologies, hardware, and algorithms that have been elaborately woven together. Today, many parts of our society, from scientific research to everyday infrastructure, are supported by cloud technology.

The word "rocket" here refers to this lecture. In this lecture, readers will fly into space on a rocket and look at the entire earth (cloud) with their own eyes. In this journey, we do not ask deeply about the detailed machinery of the rocket (i.e. elaborate theories and algorithms). Rather, the purpose of this book is to let you actually touch the cutting edge technologies of cloud computing and realize what kind of views (and applications) are possible from there.

For this reason, this book covers a wide range of topics from the basics to advanced applications of cloud computing. The first part of the book starts with the basics of cloud computing, and the second part takes it to the next level by explaining how to execute machine learning algorithms in the cloud. In the third part, we will explain serverless architecture, a completely new cloud design that has been established in the last few years. Each of these topic is worth more than one book, but this book was written with the ambitious intention of combining them into a single volume and providing a integrative and comprehensive overview.

It may not be an easy ride, but we promise you that if you hang on to this rocket, you will get to see some very exciting sights.

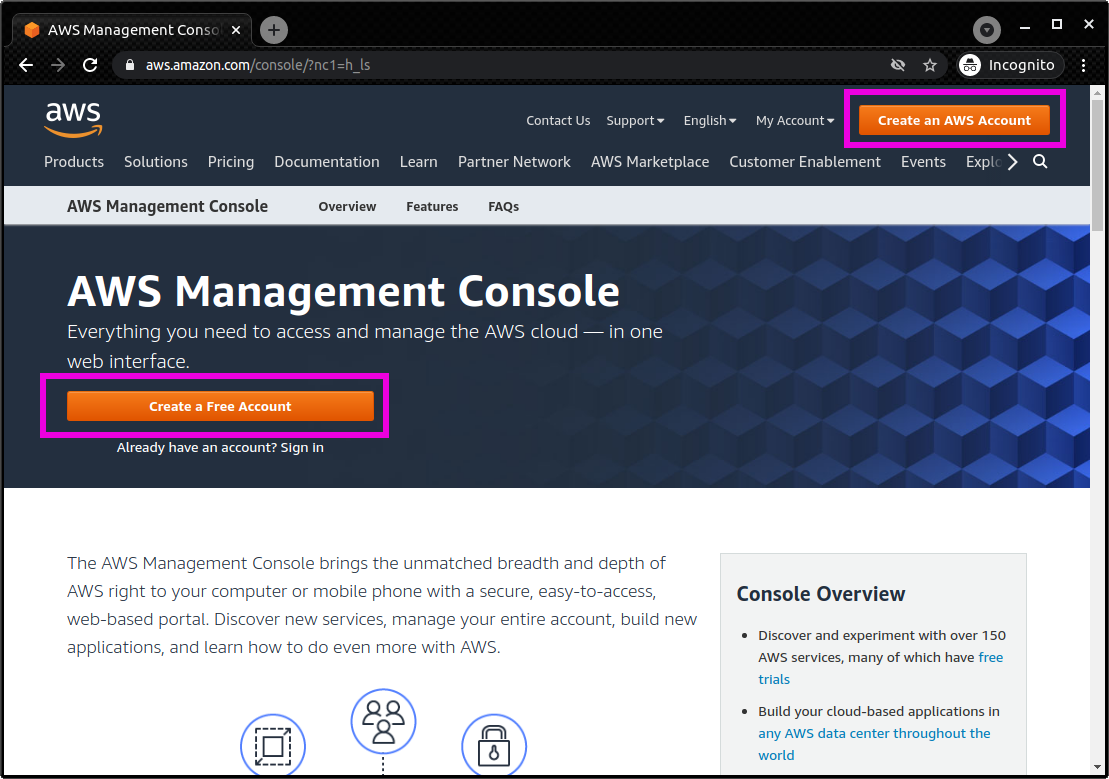

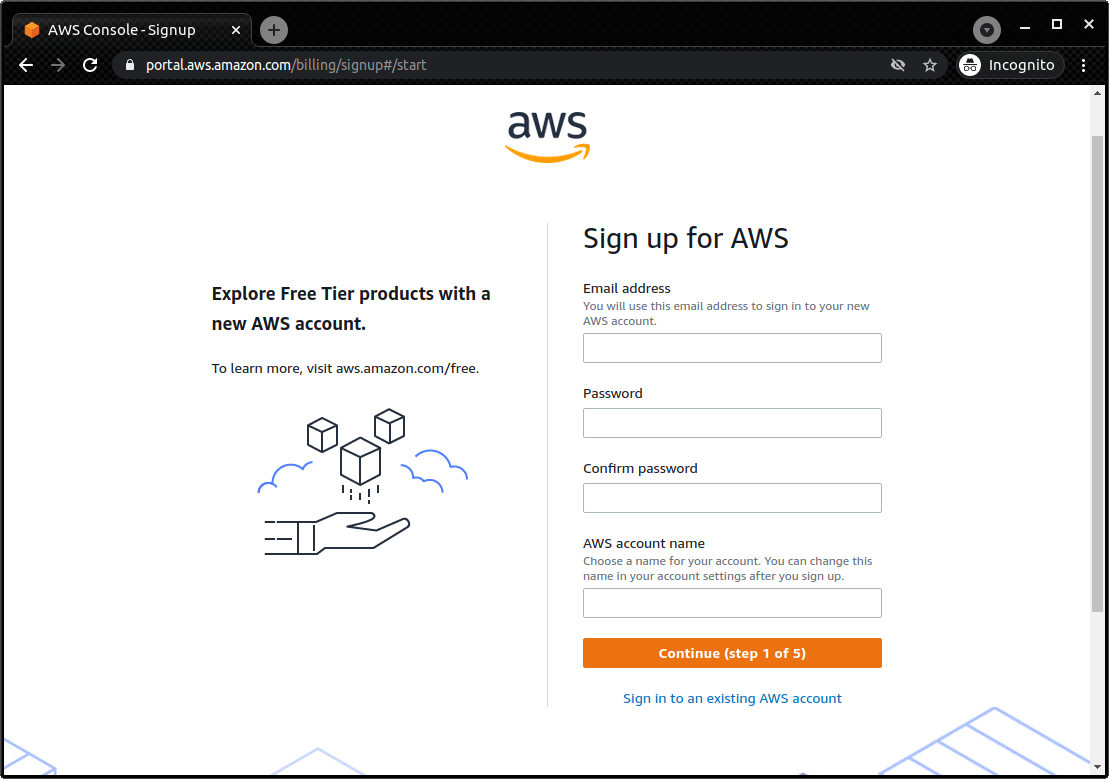

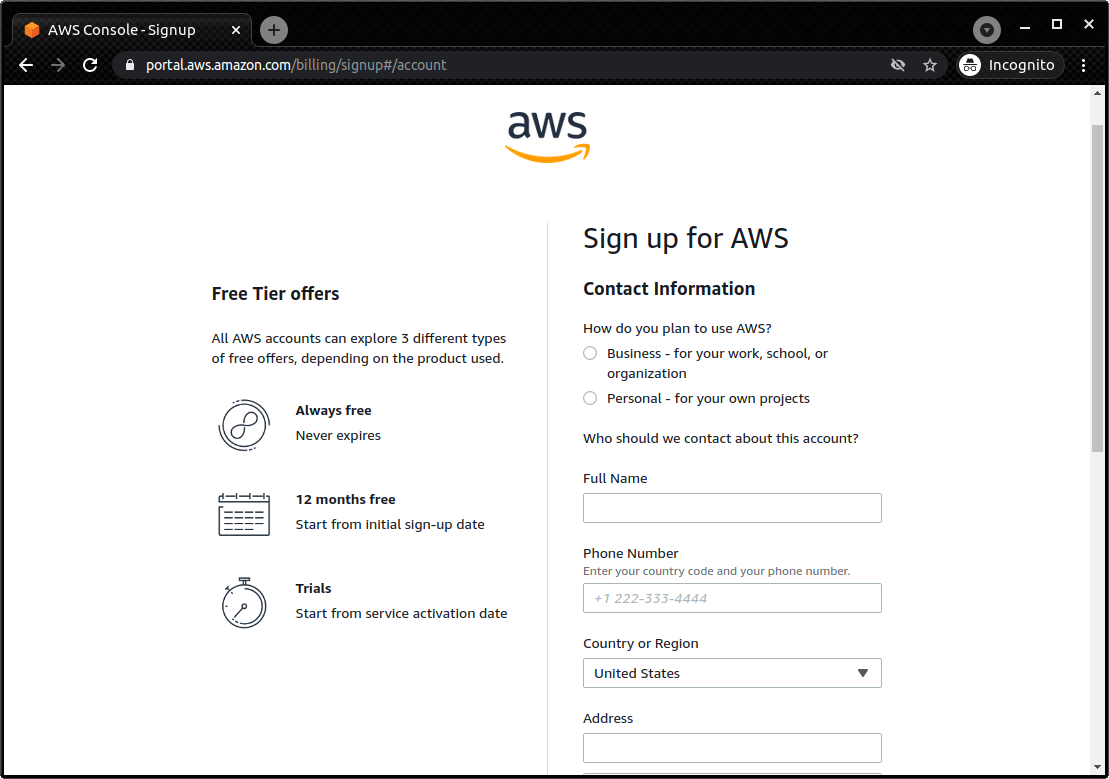

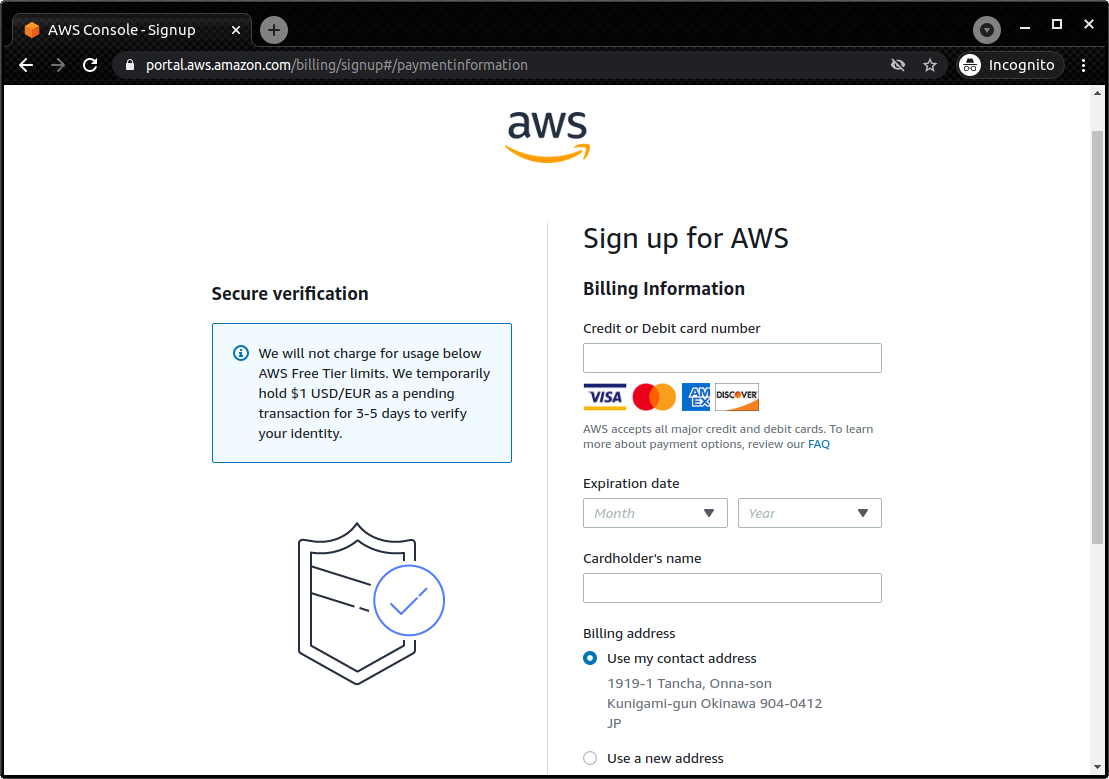

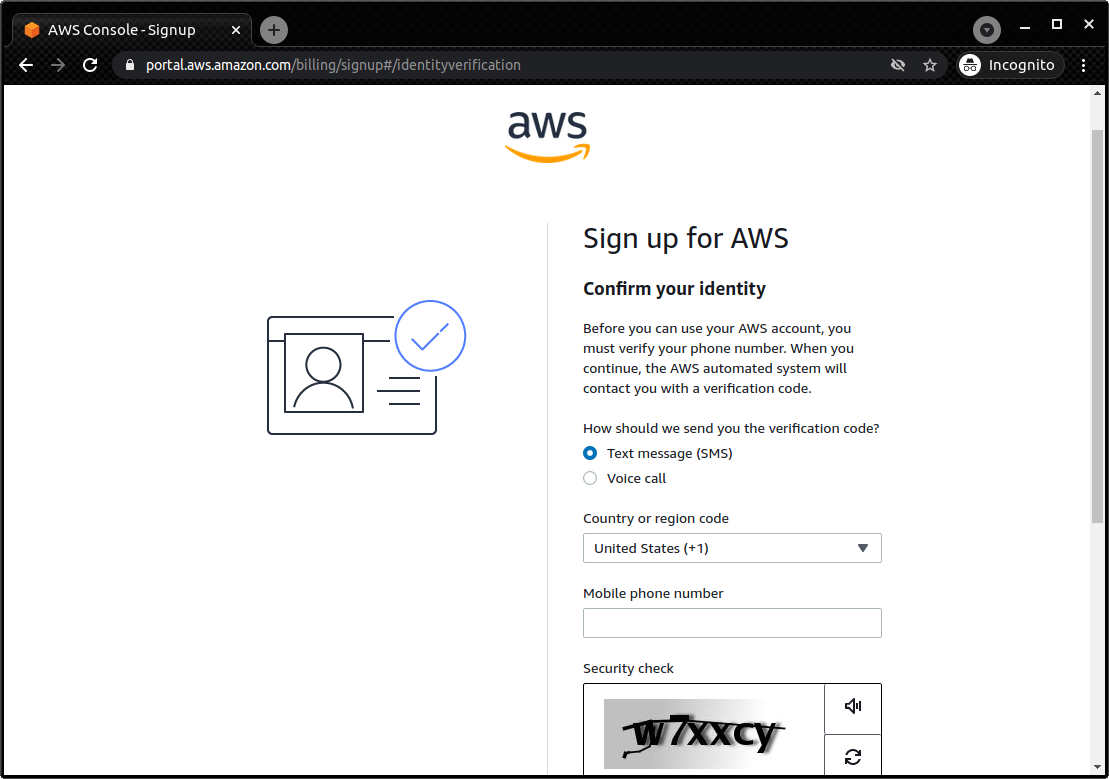

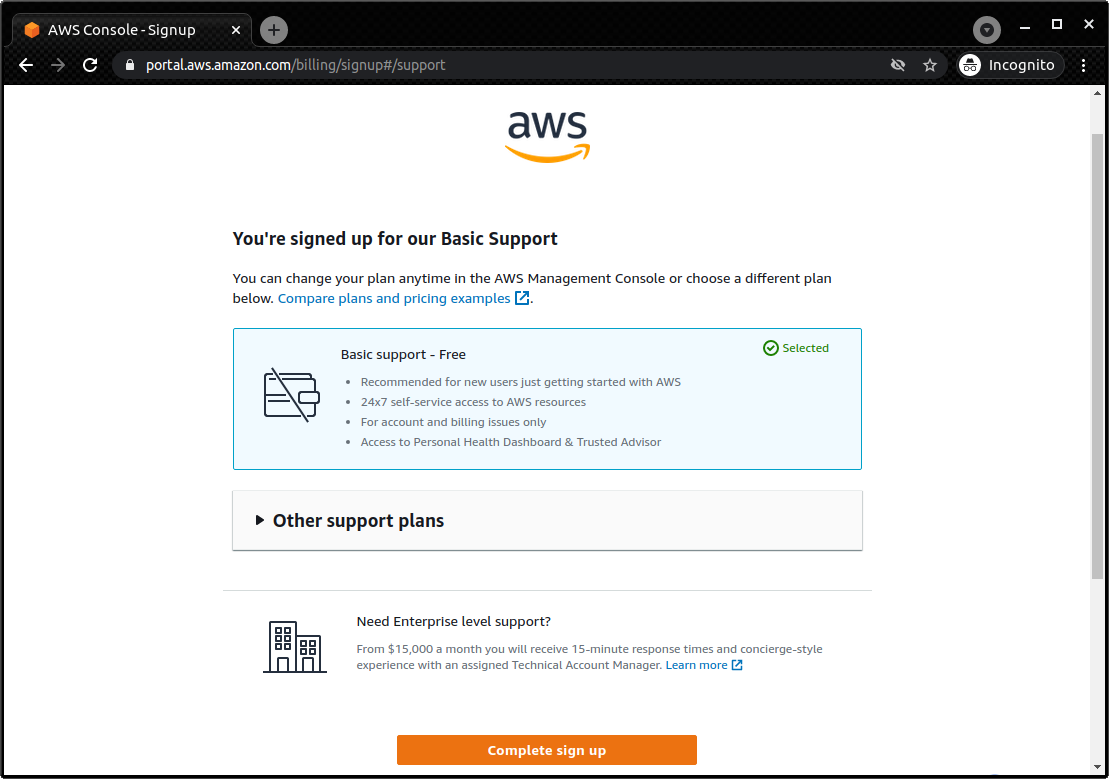

1.3. AWS account

This book provides hands-on tutorials to run and deploy applications on AWS. Readers must have their own AWS account to run the hands-on excercises. A brief description of how to create an AWS account is given in the appendix at the end of the book (Section 14.1), so please refer to it if necessary.

AWS offers free access to some features, and some hands-on excercises can be done for free. Other hands-on sessions (especially those dealing with machine learning) will cost a few dollars. The approximate cost of each hands-on is described at the begining of the excercise, so please be aware of the potential cost.

In addition, when using AWS in lectures at universities and other educational institutions, AWS Educate program is available. This program offers educators various teaching resources, including the AWS credits which students taking the course can use to run applications in the AWS cloud. By using AWS Educate, students can experience AWS without any financial cost. It is also possible for individuals to participate in AWS Educate without going through lectures. AWS Educate provides a variety of learning materials, and I encourage you to take advantage of them.

1.4. Setting up an environment

In this book, we will provide hands-on sessions to deploy a cloud application on AWS. The following computer environment is required to run the programs provided in this book. The installation procedure is described in the appendix at the end of the book (Section 14). Refer to the appendix as necessary and set up an environment in your local computer.

-

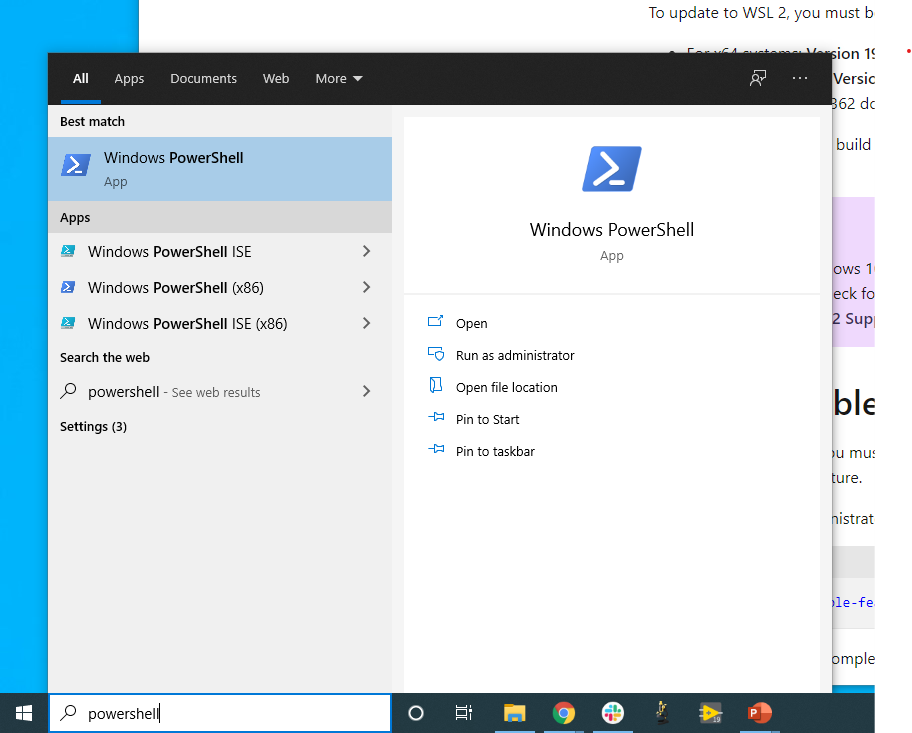

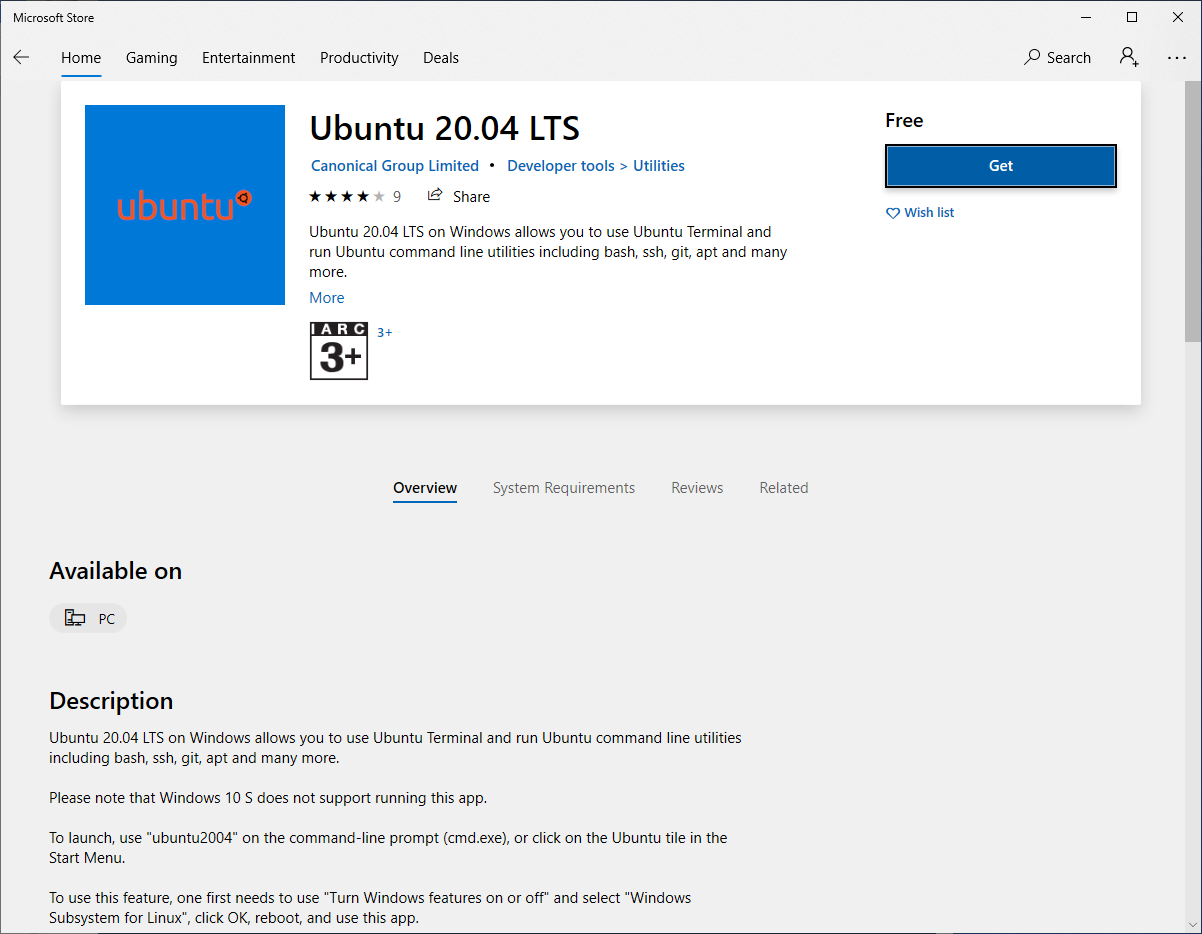

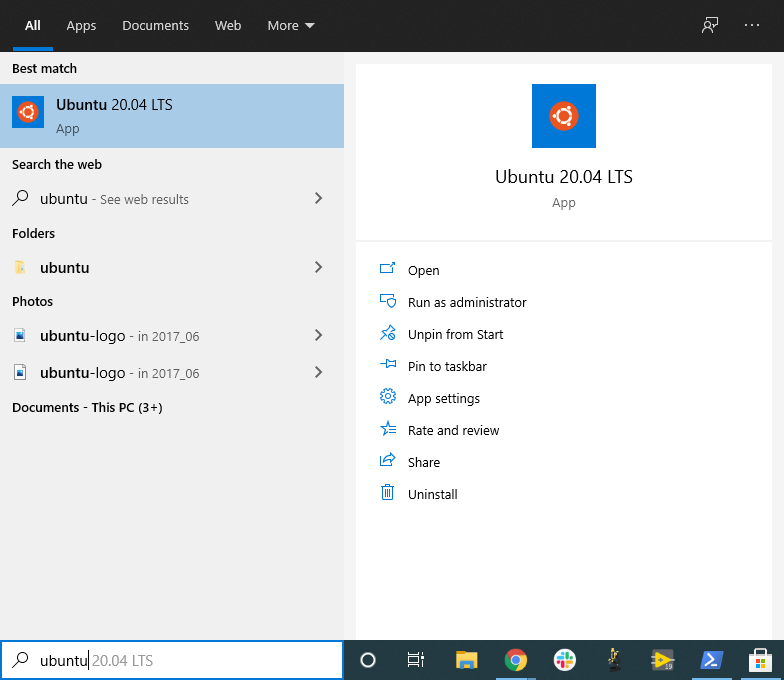

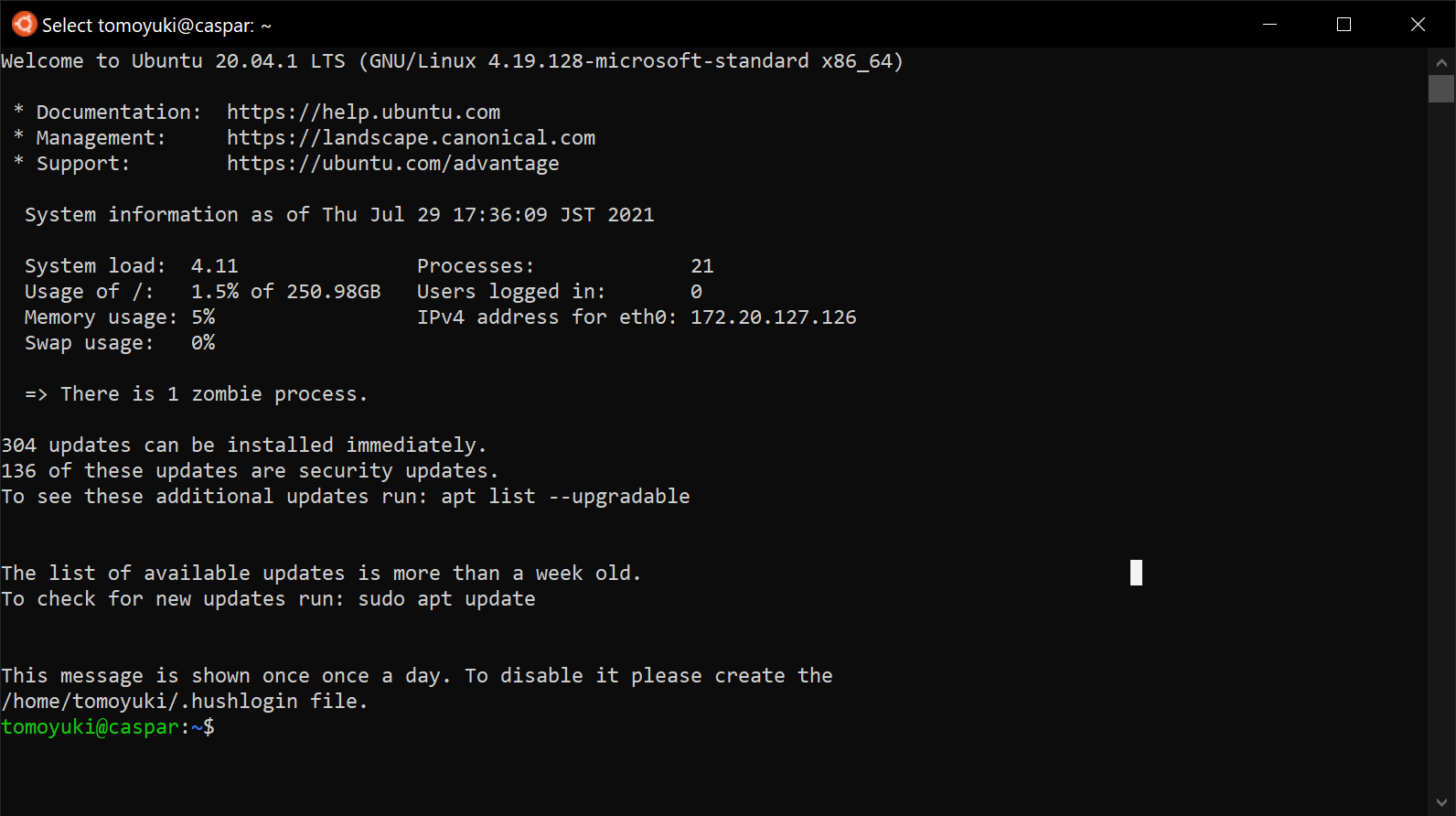

UNIX console: A UNIX console is required to execute the commands and access the server via SSH. Mac or Linux users can use the console (also known as a terminal) that comes standard with the OS. For Windows users, we recommend to install Windows Subsystem for Linux (WSL) and set up a virtual Linux environment (see Section 14.5 for more details).

-

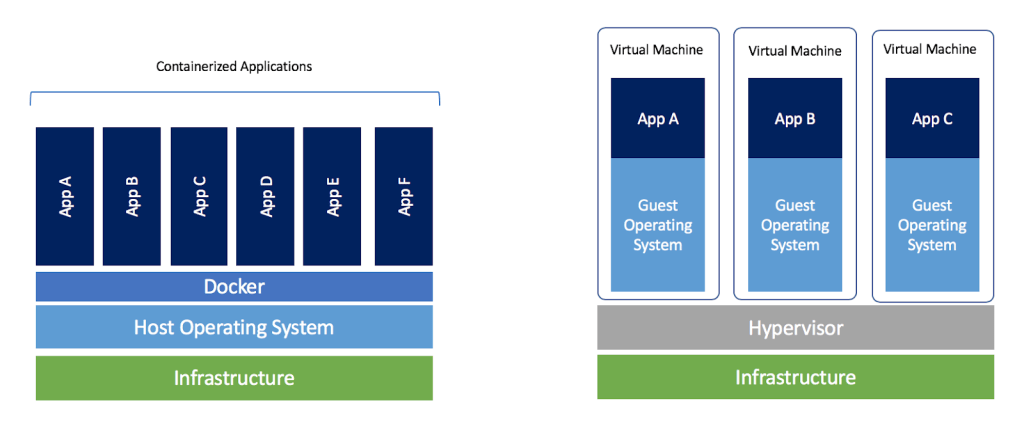

Docker: This book explains how to use a virtual computing environment called Docker. For the installation procedure, see Section 14.6.

-

Python: Version 3.6 or later is required. We will also use

venvmodule to run programs. A quick tutorial onvenvmodule is provided in the appendix (Section 14.7). -

Node.js: Version 12.0 or later is required.

-

AWS CLI: WS CLI Version 2 is required. Refer to Section 14.3 for installation and setup procedure.

-

AWS CDK: Version 1.00 or later is required. The tutorials are not compatible with version 2. Refer to Section 14.4 for installation and setup procedure.

-

AWS secret keys: In order to call the AWS API from the command line, an authentication key (secret key) must be set. Refer to Section 14.3 for the setting of the authentication key.

1.5. Docker image for the hands-on exercise

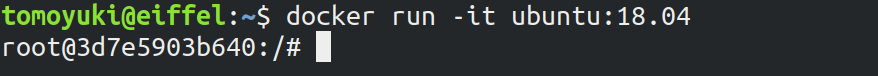

We provide a Docker image with the required programs installed, such as Python, Node.js, and AWS CDK. The source code of the hands-on program has also been included in the image. If you already know how to use Docker, then you can use this image to immediately start the hands-on tutorials without having to install anything else.

Start the the container with the followign command.

$ docker run -it tomomano/labcMore details on this Docker image is given in the appendix (Section 14.8).

1.6. Prerequisite knowledge

The only prerequisite knowledge required to read this book is an elementary level understanding of the computer science taught at the universities (OS, programming, etc.). No further prerequisite knowledge is assumed. There is no need to have any experience using cloud computing. However, the following prior knowledge will help you to understand more smoothly.

-

Basic skills in Python: In this book, we will use Python to write programs. The libraries we will be using are sufficiently abstract that most of the functions make sense just by looking at their names. There is no need to worry if you are not very familiar with Python.

-

Basic skills in Linux command line: When using the cloud, the servers that are launched on the cloud are usually Linux. If you have knowledge of the Linux command line, it will be easier to troubleshoot. If you feel unconfident about using command line, I recommend this book: The Linux Command Line by William Shotts. It is available for free on the web.

1.7. Source code

The source code of the hands-on tutorials is available at the following GitHub repository.

1.8. Notations used in this book

-

Code and shell commands are displayed with

monospace letters -

The shell commands are prefixed with

$symbol to make it clear that they are shell command. The$must be removed when copying and pasting the command. On the other hand, note that the output of a command does not have the$prefix.

In addition, we provide warnings and tips in the boxes.

| Additional comments are provided here. |

| Advanced discussions and ideas are provided here. |

| Common mistakes will be provided here. |

| Mistakes that should never be made will be provided here. |

2. Cloud Computing Basics

2.1. What is the cloud?

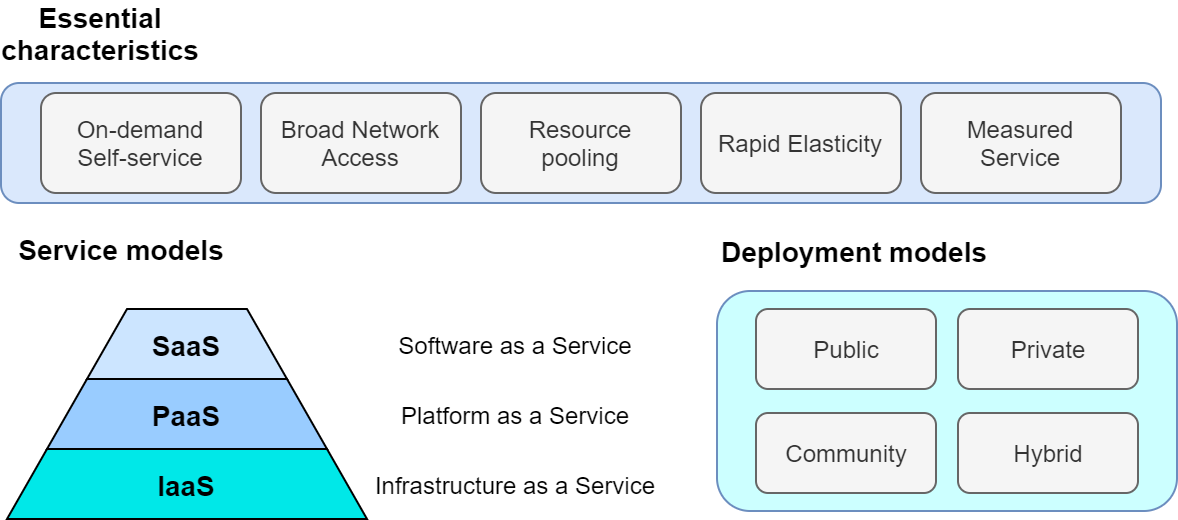

What is the cloud? The term "cloud" has a very broad meaning in itself, so it is difficult to give a strict definition. In academic context, The NIST Definition of Cloud Computing, published by National Institute of Standards and Technology (NIST), is often cited to define cloud computing. The definition and model of cloud described here is illustrated in Figure 2.

According to this, a cloud is a collection of hardware and software that meets the following requirements.

-

On-demand self-service: Computational resources are automatically allocated according to the user’s request.

-

Broad network access: Users can access the cloud through the network.

-

Resource pooling: The cloud provider allocates computational resources to multiple users by dividing the owned computational resources.

-

Rapid elasticity: To be able to quickly expand or reduce computational resources according to the user’s request.

-

Measured service: To be able to measure and monitor the amount of computing resources used.

This may sound too abstract for you to understand. Let’s talk about it in more concrete terms.

If you wanted to upgrade the CPU on your personal computer, you would have to physically open the chassis, expose the CPU socket, and replace it with a new CPU. Or, if the storage is full, you will need to remove the old disk and insert a new one. When the computer is moved to a new location, it will not be able to connect to the network until the LAN cable of the new room is plugged in.

In the cloud, these operations can be performed by commands from a program. If you want 1000 CPUs, you can send a request to the cloud provider. Within a few minutes, you will be allocated 1000 CPUs. If you want to expand your storage from 1TB to 10TB, you can send such command (you may be familiar with this from services such as Google Drive or Dropbox). When you are done using the compute resources, you can tell the provider about it, and the allocation will be deleted immediately. The cloud provider accurately monitors the amount of computing resources used, and calculates the usage fee based on that amount.

Namely, the essence of the cloud is the virtualization and abstraction of physical hardware, and users can manage and operate physical hardware through commands as if it were a part of software. Of course, behind the scenes, a huge number of computers in data centers are running, consuming a lot of power. The cloud provider achieves this virtualization and abstraction by cleverly managing the computational resources in the data center and providing the user with a software interface. From the cloud provider’s point of view, they are able to maximize their profit margin by renting out computers to a large number of users and keeping the data center utilization rate close to 100% at all times.

In the author’s words, the key characteristics of the cloud can be defined as follows:

The cloud is an abstraction of computing hardware. In other words, it is a technology that makes it possible to manipulate, expand, and connect physical hardware as if it were part of software.

Coming back to The NIST Definition of Cloud Computing mentioned above, the following three forms of cloud services are defined (Figure 2).

-

Software as a Service (SaaS)

A form of service that provides users with application running in the cloud. Examples include Google Drive and Slack. The user does not directly touch the underlying cloud infrastructure (network, servers, etc.), but use the cloud services provided as applications.

-

Platform as a Service (PaaS)

A form of service that provides users with an environment for deploying customer-created applications (which in most cases consist of a database and server code for processing API requests). In PaaS, the user does not have direct access to the cloud infrastructure, and the scaling of the server is handled by the cloud provider. Examples include Google App Engine and Heroku.

-

Infrastructure as a Service (IaaS)

A form of service that provides users with actual cloud computing infrastructure on a pay-as-you-go basis. The users rent the necessary network, servers, and storage from the provider, and deploy and operate their own applications on it. An example of IaaS is AWS EC2.

This book mainly deals with cloud development in IaaS. In other words, it is cloud development in which the developer directly manipulates the cloud infrastructure, configures the desired network, server, and storage from scratch, and deploys the application on it. In this sense, cloud development can be divided into two steps: the step of building a program that defines the cloud infrastructure and the step of crafting an application that actually runs on the infrastructure. These two steps can be separated to some extent as a programmer’s skill set, but an understanding of both is essential to build the most efficient and optimized cloud system. This book primarily focuses on the former (operating the cloud infrastructure), but also covers the application layer. PaaS is a concept where the developer focuses on the application layer development and relies on the cloud provider for the cloud infrastructure. PaaS reduces development time by eliminating the need to develop the cloud infrastructure, but has the limitation of not being able to control the detailed behavior of the infrastructure. This book does not cover PaaS techniques and concepts.

SaaS can be considered a development "product" in the context of this book. In other words, the final goal of development is to provide a computational service or database on the available to the general public by deploying the programs on IaaS platform. As a practical demonstration, we will provide hands-on exercises such as creating a simple SNS (Section 13).

Recently, Function as a Service (FaaS) and serverless computing have been recognized as new cloud categories. These concepts will be discussed in detail in later chapters (Section 12). As will become clear as you read through this book, cloud technology is constantly and rapidly evolving. This book first touches on traditional cloud design concepts from a practical and educational point of view, and then covers the latest technologies such as serverless.

Finally, according to The NIST Definition of Cloud Computing, the following four types of cloud deployment model are defined (Figure 2). Private cloud is a cloud used only within a specific organization, group, or company. For example, universities and research institutes often operate large-scale computer servers for their members. In a private cloud, any member of the organization can run computations for free or at a very low cost. However, the upper limit of available computing resources is often limited, and there may be a lack of flexibility when expanding.

Pubclic cloud is a cloud that is offered as a commercial service to general customers. Examples of famous public cloud platforms include Google Cloud Platform (GCP) provided by Google, Azure provided by Microsoft, and Amazon Web Services (AWS) provided by Amazon. When you use a public cloud, you pay the usage cost set by the provider. In return, you get access to the computational resources of the company operating the huge data center, so it is not an exaggeration to say that the computational capacity is inexhaustible.

The third type of cloud operation is called community cloud. This refers to a cloud that is shared and operated by groups and organizations that share the same objectives and roles, such as government agencies. Finally, there is the hybrid cloud, which is a cloud composed of a combination of private, public, and community clouds. An example of hybrid cloud would be a case where some sensitive and privacy-related information is kept in the private cloud, while the rest of the system depends on the public cloud.

This book is basically about cloud development using public clouds. In particular, we will use Amazon Web Services (AWS) to learn specific techniques and concepts. Note, however, that techniques such as server scaling and virtual computing environments are common to all clouds, so you should be able to acquire knowledge that is generally applicable regardless of the cloud platform.

2.2. Why use the cloud?

As mentioned above, the cloud is a computational environment where computational resources can be flexibly manipulated through programs. In this section, we would like to discuss why using the cloud is better than using a real local computing environment.

-

Scalable server size

When you start a new project, it’s hard to know in advance how much compute capacity you’ll ever need. Buying a large server is risky. On the other hand, a server that is too small can be troublesome to upgrade later on. By using the cloud, you can secure the right amount of computing resources you need as you proceed with your project.

-

Free from hardware maintainance

Sadly, computers do get old. With the rate at which technology is advancing these days, after five years, even the newest computers of the day are no more than fossils. Replacing the server every five years would be a considerable hassle. It is also necessary to deal with unexpected failures such as power outages and breakdowns of servers. With cloud computing, there is no need for the user to worry about such things, as the provider automatically takes care of the infrastructure maintenance.

-

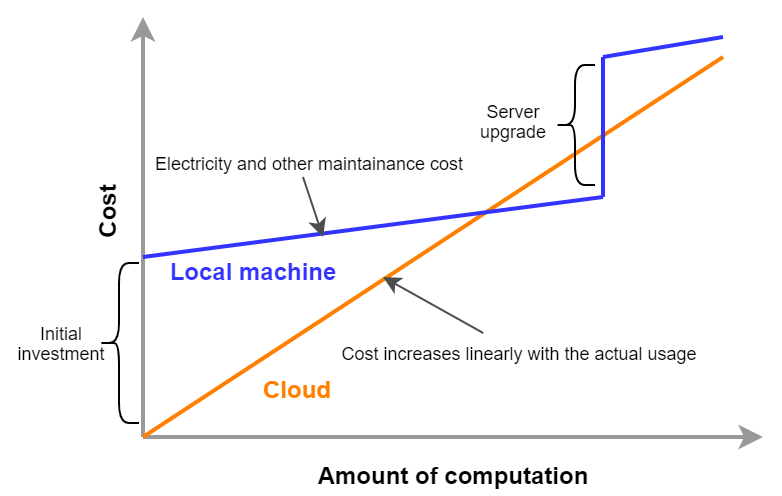

Zero initial cost

Figure 3 shows the economic cost of using your own computing environment versus the cloud. The initial cost of using the cloud is basically zero. After that, the cost increases according to the amount of usage. On the other hand, a large initial cost is incurred when using your own computing environment. After the initial investment, the increase in cost is limited to electricity and server maintenance costs, so the slope is smaller than in the case of using the cloud. Then, after a certain period of time, there may be step-like expenditures for server upgrades. The cloud, on the other hand, incur no such discontinuous increase in cost. In the areas where cost curve of the cloud is below that of local computing environment, using the cloud will lead to economic cost savings.

In particular, point 1 is important in research situations. In research, there are few cases in which one must keep running computations all the time. Rather, the computational load is likely to increase intensively and unexpectedly when a new algorithm is conceived, or when new data arrives. In such cases, the ability to flexibly increase computing power is a major advantage of using the cloud.

So far, we have discussed the advantages of using the cloud, but there are also some disadvantages.

-

The cloud must be used wisely

As shown in the cost curve in Figure 3, depending on your use case, there may be situations where it is more cost effective to use local computing environment. When using the cloud, users are required to manage their computing resources wisely, such as deleting intances immediately after use.

-

Security

The cloud is accessible from anywhere in the world via the Internet, and can be easily hacked if security management is neglected. If the cloud is hacked, not only will information be leaked, but there is also the possibility of financial loss.

-

Learning Curve

As described above, there are many points to keep in mind when using the cloud, such as cost and security. In order to use the cloud wisely, it is indispensable to have a good understanding of the cloud and to overcome the learning curve.

3. Introduction to AWS

3.1. What is AWS?

In this book, AWS is used as the platform for implementing cloud applications. In this chapter, we will explain the essential knowledge of AWS that is required for hands-on tutorials.

AWS (Amazon Web Services) is a general cloud platform provided by Amazon. AWS was born in 2006 as a cloud service that leases vast computing resources that Amazon owns. In 2021, AWS holds the largest market share (about 32%) as a cloud provider (Ref). Many web-related services, including Netflix and Slack, have some or all of their server resources provided by AWS. Therefore, most of the readers would be benefiting from AWS without knowing it.

Because it has the largest market share, it offers a wider range of functions and services than any other cloud platforms. In addition, reflecting the large number of users, there are many official and third-party technical articles on the web, which is helpful in learning and debugging. In the early days, most of the users were companies engaged in web business, but recently, there is a growing number of users embracing AWS for scientific and engineering research.

3.2. Functions and services provided by AWS

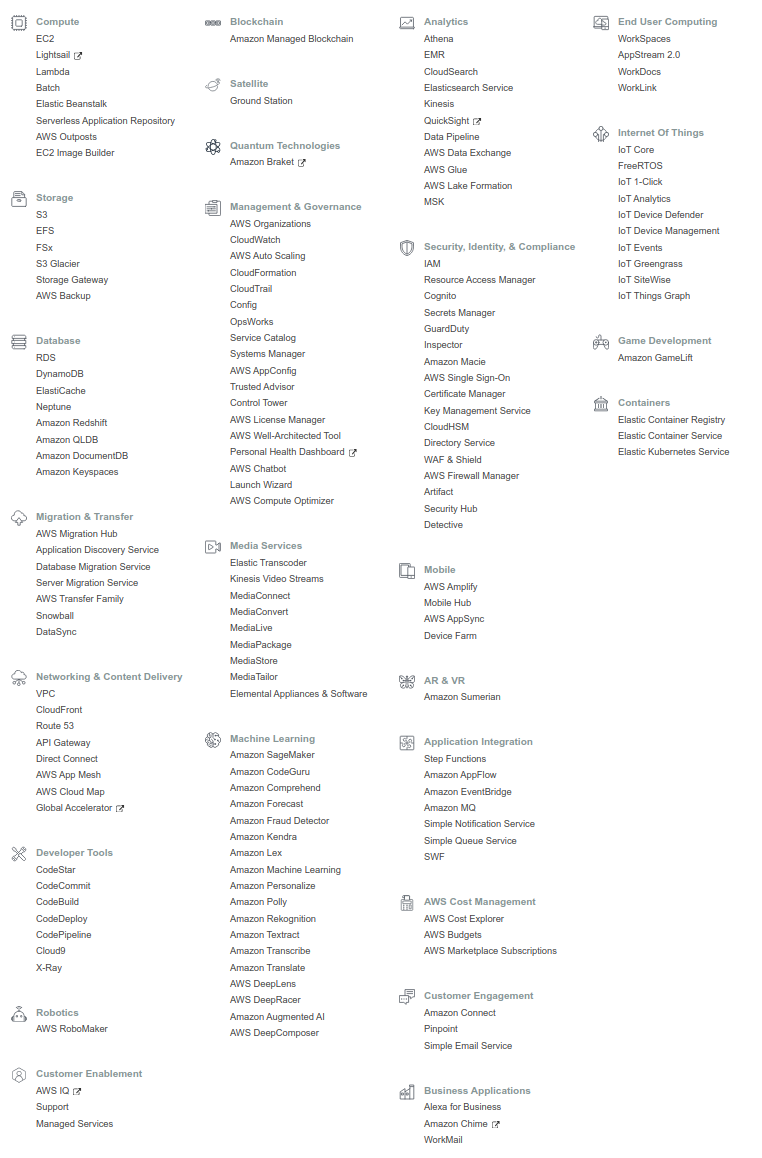

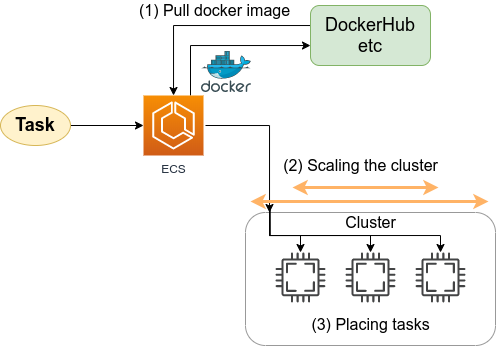

Figure 4 shows a list of the major services provided by AWS at the time of writing.

The various elements required to compose a cloud, such as computation, storage, database, network, and security, are provided as independent components. Essentially, a cloud system is created by combining these components. There are also pre-packaged services for specific applications, such as machine learning, speech recognition, and augmented reality (AR) and virtual reality (VR). In total, there are more than 170 services provided.

AWS beginners often fall into a situation where they are overwhelmed by the large number of services and left at a loss. It’s not even clear what concepts to learn and in what order, and this is undoubtedly a major barrier to entry. However, the truth is that the essential components of AWS are limited to just a couple. If you know how to use the essential components, you are almost ready to start developing on AWS. Many of the other services are combinations of the basic elements that AWS has prepared as specialized packages for specific applications. Recognizing this point is the first step in learning AWS.

Here, we list the essential components for building a cloud system on AWS. You will experience them while writing programs in the hands-on sessions in later chapters. At this point, it is enough if you could just memorize the names in a corner of your mind.

3.2.1. computation

![]() EC2 (Elastic Compute Cloud)

Virtual machines with various specifications can be created and used to perform calculations.

This is the most basic component of AWS.

We will explore more on EC2 in later chapters (Section 4, Section 6, Section 9).

EC2 (Elastic Compute Cloud)

Virtual machines with various specifications can be created and used to perform calculations.

This is the most basic component of AWS.

We will explore more on EC2 in later chapters (Section 4, Section 6, Section 9).

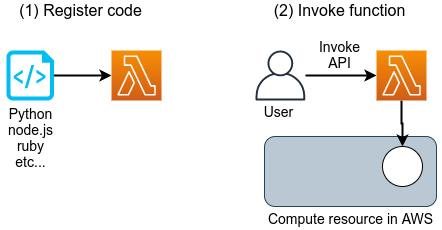

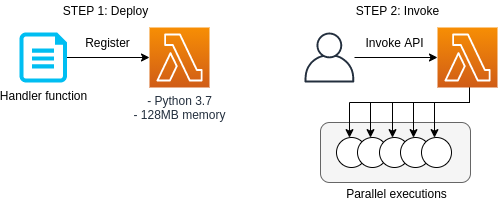

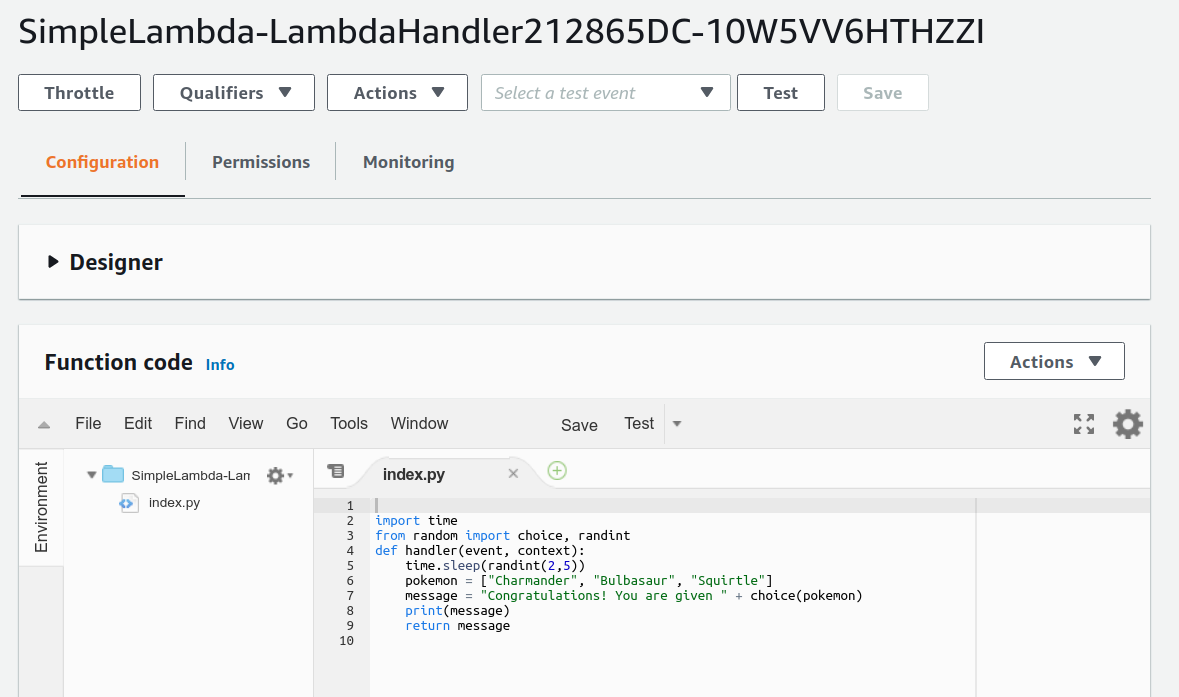

![]() Lambda

Lambda is a part of the cloud called Function as a Service (FaaS), a service for performing small computations without a server.

It will be described in detail in the chapter on serverless architecture (Section 11).

Lambda

Lambda is a part of the cloud called Function as a Service (FaaS), a service for performing small computations without a server.

It will be described in detail in the chapter on serverless architecture (Section 11).

3.2.2. Storage

![]() EBS (Elastic Block Store)

A virtual data drive that can be assigned to EC2.

Think of a "conventional" file system as used in common operating systems.

EBS (Elastic Block Store)

A virtual data drive that can be assigned to EC2.

Think of a "conventional" file system as used in common operating systems.

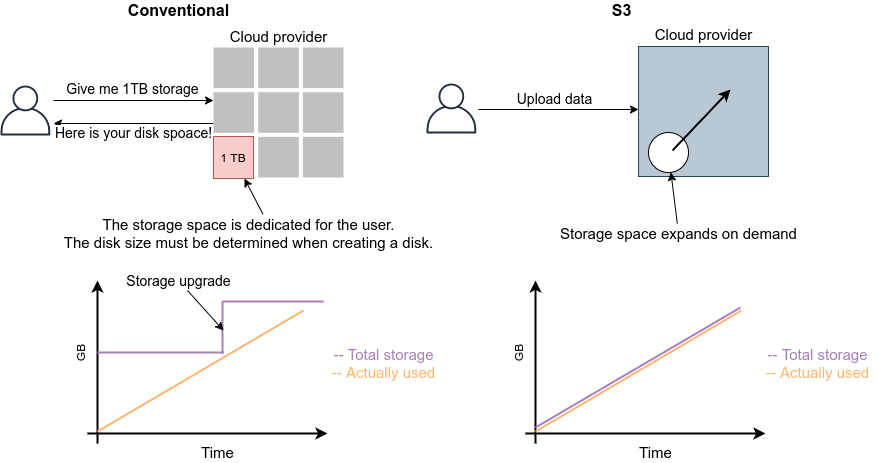

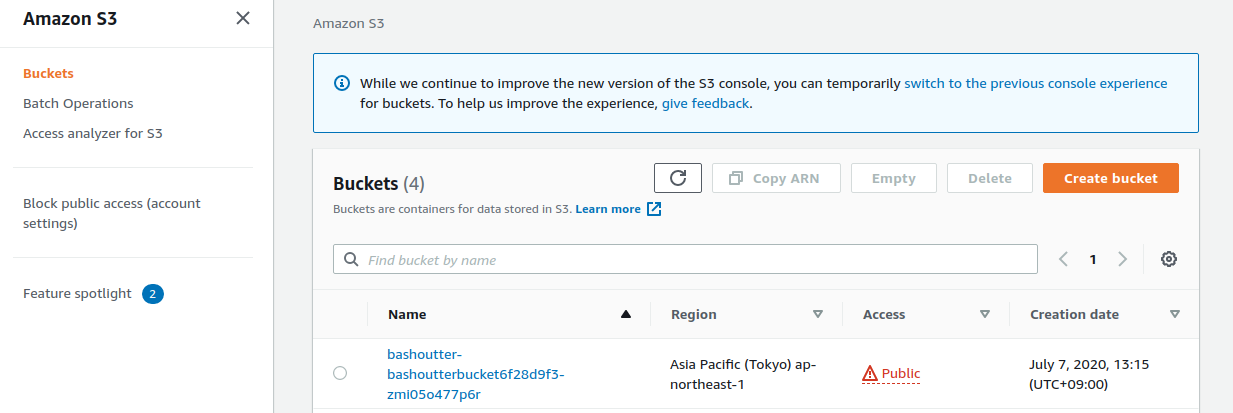

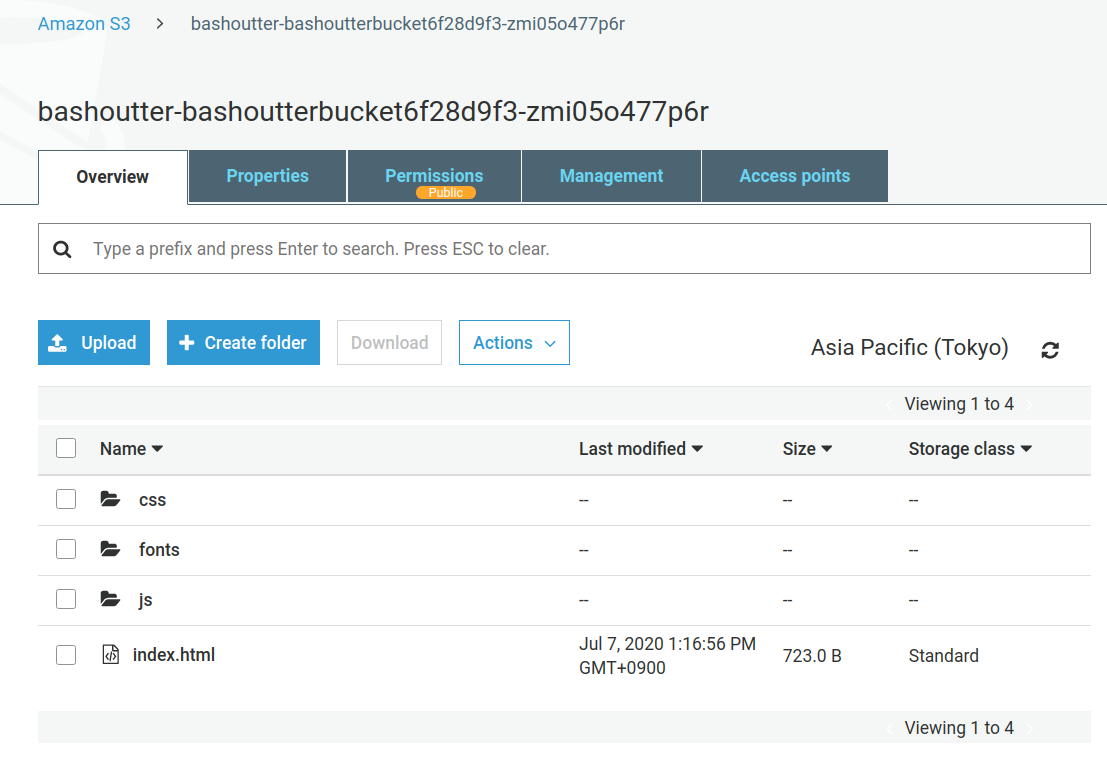

![]() S3 (Simple Storage Service)

S3 is a "cloud-bative" data storage system called Object Storage, which uses APIs to read and write data.

It will be described in detail in the chapter on serverless architecture (Section 11).

S3 (Simple Storage Service)

S3 is a "cloud-bative" data storage system called Object Storage, which uses APIs to read and write data.

It will be described in detail in the chapter on serverless architecture (Section 11).

3.2.3. Database

![]() DynamoDB

DynamoDB is a NoSQL type database service (think of

DynamoDB

DynamoDB is a NoSQL type database service (think of mongoDB if you know it).

It will be described in detail in the chapter on serverless architecture (Section 11).

3.2.4. Networking

![]() VPC(Virtual Private Cloud)

With VPC, one can create a virtual network environment on AWS, define connections between virtual servers, and manage external access.

EC2 must be placed inside a VPC.

VPC(Virtual Private Cloud)

With VPC, one can create a virtual network environment on AWS, define connections between virtual servers, and manage external access.

EC2 must be placed inside a VPC.

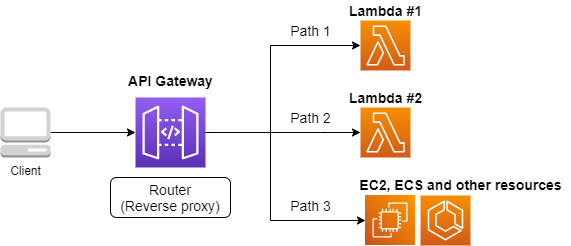

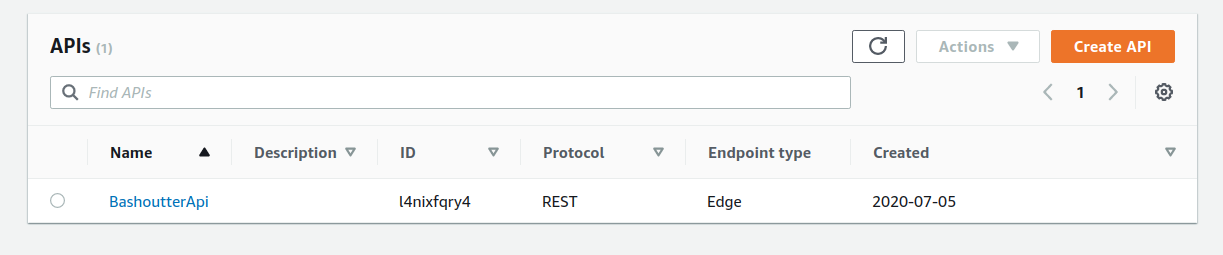

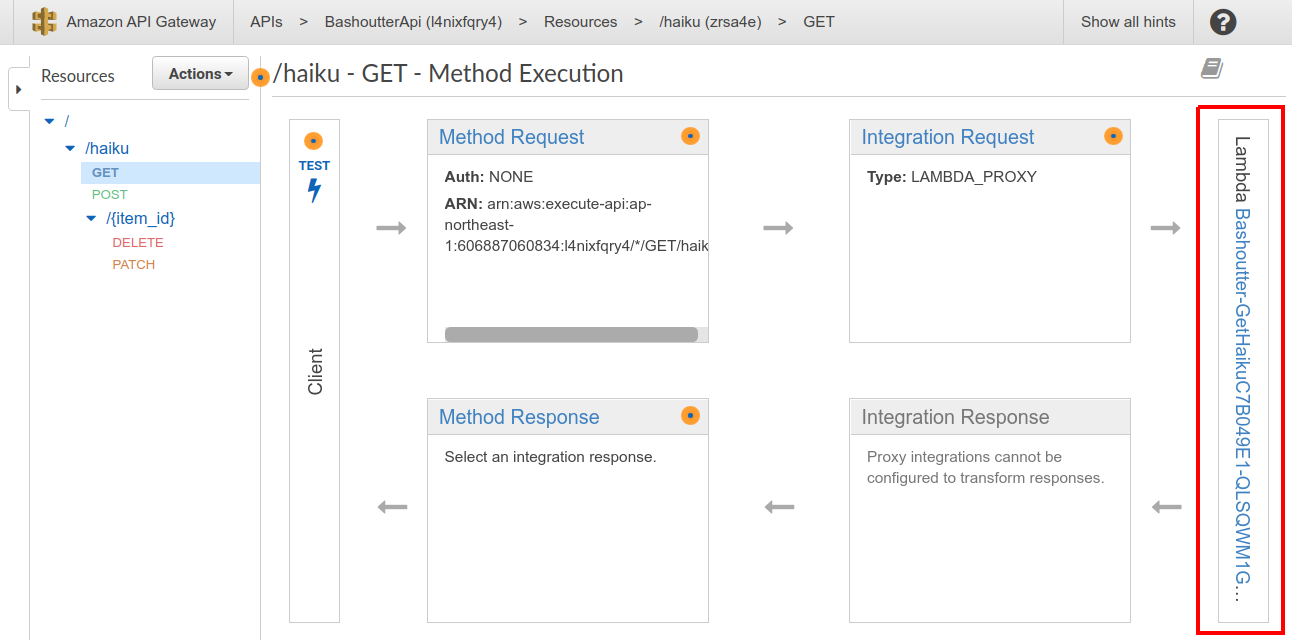

API Gateway

![]() API Gateway acts as a reverse proxy to connect API endpoints to backend services (such as Lambda).

It will be described in detail in Section 13.

API Gateway acts as a reverse proxy to connect API endpoints to backend services (such as Lambda).

It will be described in detail in Section 13.

3.3. Regions and Availability Zones

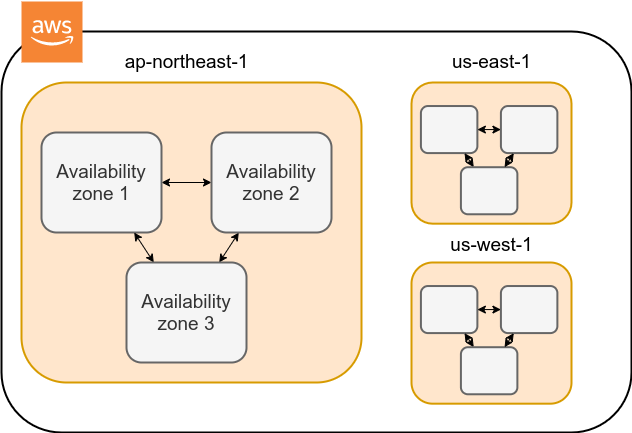

One of the most important concepts you need to know when using AWS is Region and Availability Zone (AZ) (Figure 5). In the following, we will briefly describe these concepts. For more detailed information, also see official documentation "Regions, Availability Zones, and Local Zones".

A region roughly means the location of a data center.

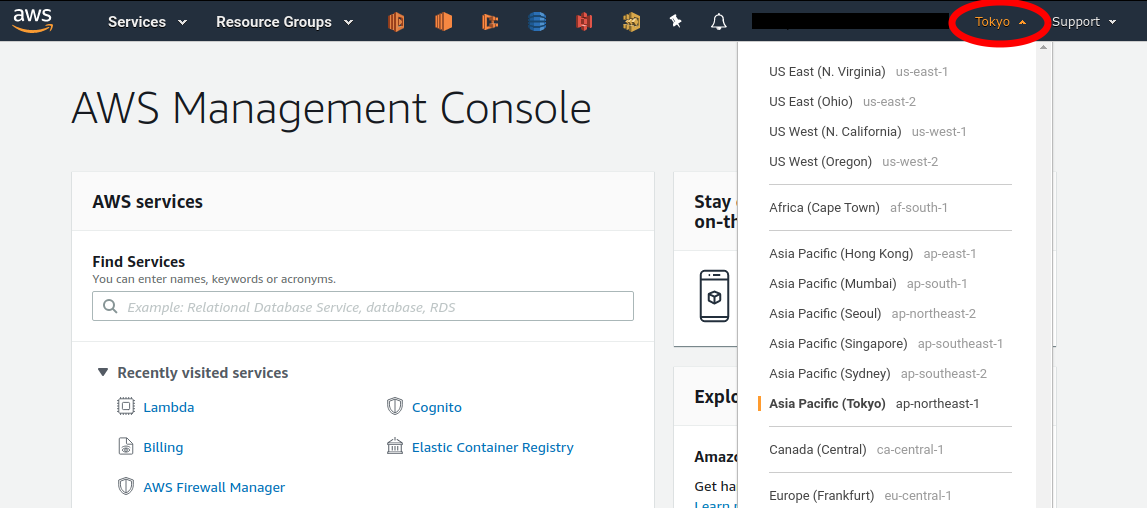

At the time of writing, AWS has data centers in 25 geographical locations around the world, as shown in Figure 6.

In Japan, there are data centers in Tokyo and Osaka.

Each region has a unique ID, for example, Tokyo is defined as ap-northeast-1, Ohio as us-east-2, and so on.

When you log in to the AWS console, you can select a region from the menu bar at the top right of the screen (Figure 7, circled in red). AWS resources such as EC2 are completely independent for each region. Therefore, when deploying new resources or viewing deployed resources, you need to make sure that the console region is set correctly. If you are developing a web business, you will need to deploy the cloud in various parts of the world. However, if you are using it for personal research, you are most likely fine just using the nearest region (e.g. Tokyo).

An Avaialibity Zone (AZ) is a data center that is geographically isolated within a region. Each region has two or more AZs, so that if a fire or power failure occurs in one AZ, the other AZs can cover the failure. In addition, the AZs are connected to each other by high-speed dedicated network lines, so data transfer between AZs is extremely fast. AZ is a concept that should be taken into account when server downtime is unacceptable, such as in web businesses. For personal use, there is no need to be concerned much about it. It is sufficient to know the meaning of the term.

|

When using AWS, which region should you select? In terms of Internet connection speed, it is generally best to use the region that is geographically closest to you. On the other hand, EC2 usage fees, etc., are priced slightly differently for each region. Therefore, it is also important to choose the region with the lowest price for the services that you use most frequently. In addition, some services may not be available in a particular region. It is best to make an overall judgment based on these points. |

3.4. Cloud development in AWS

Now that you have a general understanding of the AWS cloud, the next topic will be an overview of how to develop and deploy a cloud system on AWS.

There are two ways to perform AWS operations such as adding, editing, and deleting resources: using the console and using the API.

3.4.1. Operating the resources through the console

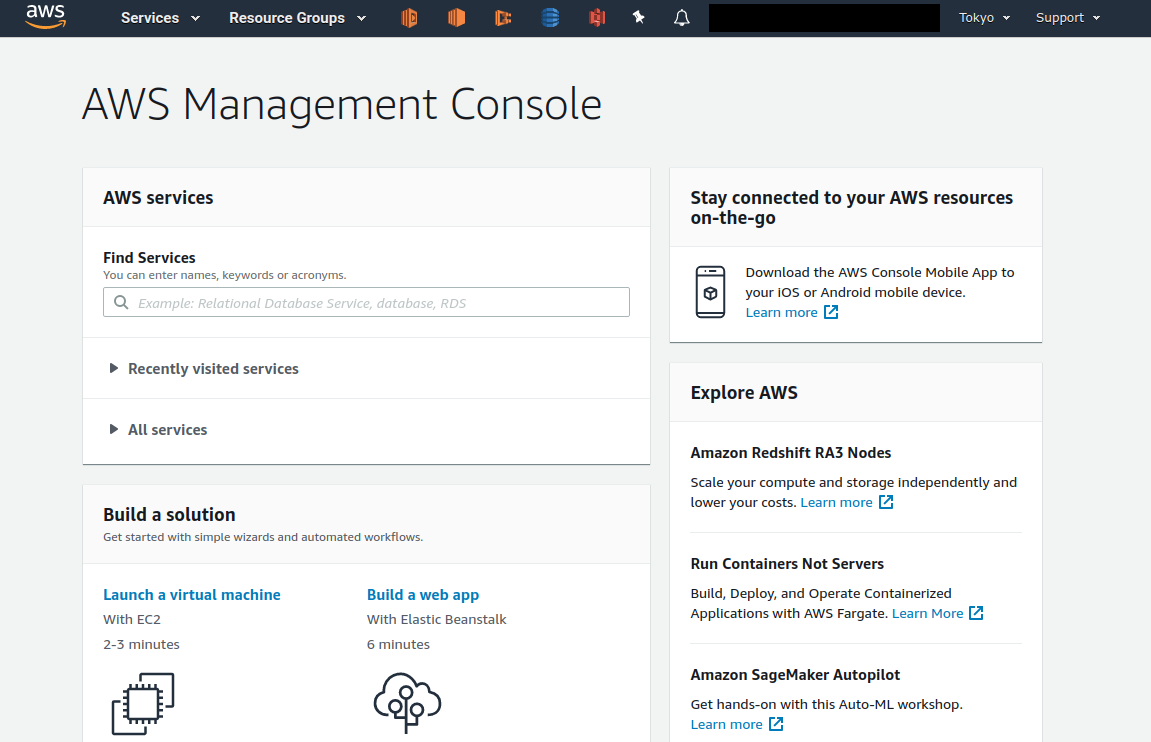

When you log in to your AWS account, the first thing you will see is the AWS Management Console (Figure 8).

|

In this book we will often call AWS Management Console AWS console or just a console. |

Using the console, you can peform any operations on AWS resources through a GUI (Graphical User Interface), such as launching EC2 instances, adding and deleting data in S3, viewing logs, and so on. AWS console is very useful when you are trying out a new function for the first time or debugging the system.

The console is useful for quickly testing functions and debugging the cloud under development, but it is rarely used directly in actual cloud development. Rather, it is more common to use the APIs to describe cloud resources programmatically. For this reason, this book does not cover how to use AWS console. The AWS documentation includes many tutorials which describe how to perform various operations from the AWS console. They are valuable resources for learning.

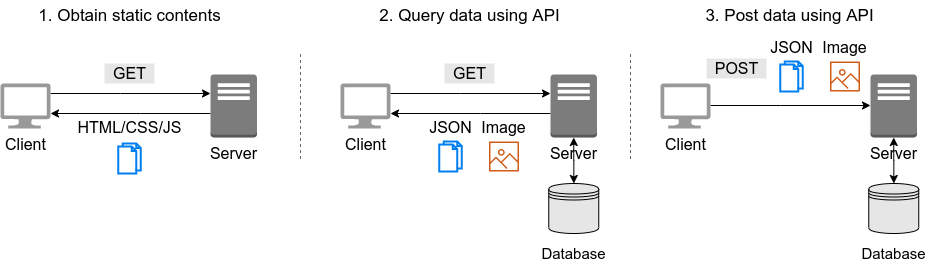

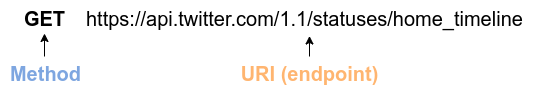

3.4.2. Operating the resources through the APIs

By using API (Application Programming Interface), you can send commands to AWS and manipulate cloud resources. APIs are simply a list of commands exposed by AWS, and consisted of REST APIs (REST APIs are explained in Section 10.2). However, directly entering the REST APIs can be tedious, so various tools are provided to interact with AWS APIs more conveniently.

For example, AWS CLI is a command line interface (CLI) to execute AWS APIs through UNIX console. In addition to the CLI, SDKs (Software Development Kits) are available in a variety of programming languages. Some examples are listed below.

-

Python ⇒ boto3

-

Ruby ⇒ AWS SDK for Ruby

-

Node.js ⇒ AWS SDK for Node.js

Let’s look at a some of the API examples.

Let’s assume that you want to add a new storage space (called a Bucket) to S3.

If you use the AWS CLI, you can type a command like the following.

$ aws s3 mb s3://my-bucket --region ap-northeast-1The above command will create a bucket named my-bucket in the ap-northeast-1 region.

To perform the same operation from Python, use the boto3 library and run a script like the following.

1

2

3

4

import boto3

s3_client = boto3.client("s3", region_name="ap-northeast-1")

s3_client.create_bucket(Bucket="my-bucket")

Let’s look at another example.

To start a new EC2 instance (an instance is a virtual server that is in the running state), use the following command.

$ aws ec2 run-instances --image-id ami-xxxxxxxx --count 1 --instance-type t2.micro --key-name MyKeyPair --security-group-ids sg-903004f8 --subnet-id subnet-6e7f829eThis command will launch a t2.micro instance with 1 vCPU and 1.0 GB RAM. We’ll explain more about this command in later chapter (Section 4).

To perform the same operation from Python, use a script like the following.

1

2

3

4

5

6

7

8

9

10

11

12

import boto3

ec2_client = boto3.client("ec2")

ec2_client.run_instances(

ImageId="ami-xxxxxxxxx",

MinCount=1,

MaxCount=1,

KeyName="MyKeyPair",

InstanceType="t2.micro",

SecurityGroupIds=["sg-903004f8"],

SubnetId="subnet-6e7f829e",

)

Through the above examples, we hope you are starting to get an idea of how APIs can be used to manipulate cloud resources. With a single command, you can start a new virtual server, add a data storage area, or perform any other operation you want. By combining multiple commands like this, you can build a computing environment with the desired CPU, RAM, network, and storage. Of course, the delete operation can also be performed using the API.

3.4.3. Mini hands-on: Using AWS CLI

In this mini hands-on, we will learn how to use AWS CLI.

As mentioned earlier, AWS CLI can be used to manipulate any resource on AWS, but here we will practice the simplest case, reading and writing files using S3.

(EC2 operations are a bit more complicated, so we will cover them in Section 4).

For detailed usage of the aws s3 command, please refer to official documentation.

|

For information on installing the AWS CLI, see Section 14.3. |

|

The hands-on exercise described below can be performed within the free S3 tier. |

|

Before executing the following commands, make sure that your AWS credentials are set correctly.

This requires that the settings are written to the file |

To begin with, let’s create a data storage space (called a Bucket) in S3.

$ bucketName="mybucket-$(openssl rand -hex 12)"

$ echo $bucketName

$ aws s3 mb "s3://${bucketName}"Since the name of an S3 bucket must be unique across AWS, the above command generates a bucket name that contains a random string and stores it in a variable called bucketName.

Then, a new bucket is created by aws s3 mb command (mb stands for make bucket).

Next, let’s obtain a list of the buckets.

$ aws s3 ls

2020-06-07 23:45:44 mybucket-c6f93855550a72b5b66f5efeWe can see that the bucket we just created is in the list.

|

As a notation in this book, terminal commands are prefixed with |

Next, we upload the files to the bucket.

$ echo "Hello world!" > hello_world.txt

$ aws s3 cp hello_world.txt "s3://${bucketName}/hello_world.txt"Here, we generated a dummy file hello_world.txt and uploaded it to the bucket.

Now, let’s obtain a list of the files in teh bucket.

$ aws s3 ls "s3://${bucketName}" --human-readable

2020-06-07 23:54:19 13 Bytes hello_world.txtWe can see that the file we just uploaded is in the list.

Lastly, we delete the bucket we no longer use.

$ aws s3 rb "s3://${bucketName}" --forcerb stands for remove bucket.

By default, you cannot delete a bucket if there are files in it.

By adding the --force option, a non-empty bucket are forced to be deleted.

As we just saw, we were able to perform a series of operations on S3 buckets using the AWS CLI. In the same manner, you can use the AWS CLI to perform operation on EC2, Lambda, DynamoDB, and any other resources.

|

Amazon Resource Name (ARN). Every resource on AWS is assigned a unique ID called Amazon Resource Name (ARN).

ARNs are written in a format like In addition to ARNs, it is also possible to define human-readable names for S3 buckets and EC2 instances. In this case, either the ARN or the name can be used to refer to the same resource. |

3.5. CloudFormation and AWS CDK

As mentioned in the previous section, AWS APIs can be used to create and manage any resources in the cloud. Therefore, in principle, you can construct cloud systems by combining API commands.

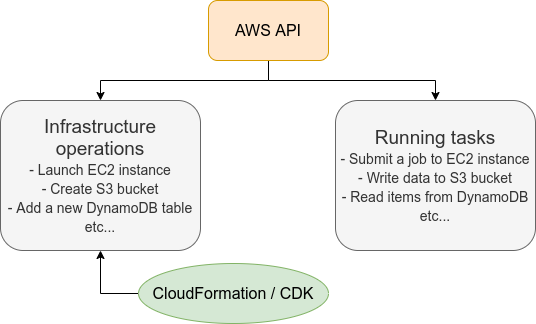

However, there is one practical point that needs to be considered here. The AWS API can be broadly divided into commands to manipulate resources and commands to execute tasks (Figure 9).

Manipulating resources refers to preparing static resources, such as launching an EC2 instance, creating an S3 bucket, or adding a new table to a database. Such commands need to be executed only once, when the cloud is deployed.

Commands to execute tasks refer to operations such as submitting a job to an EC2 instance or writing data to an S3 bucket. It describes the computation that should be performed within the premise of a static resource such as EC2 instance or S3 bucket. Compared to the former, the latter can be regarded as being in charge of dynamic operations.

From this point of view, it would be clever to manage programs describing the infrastructure and programs executing tasks separately. Therefore, the development of a cloud can be divided into two steps: one is to create programs that describe the static resources of the cloud, and the other is to create programs that perform dynamic operations.

CloudFormation is a mechanism for managing static resources in AWS. CloudFormation defines the blueprint of the cloud infrastructure using text files that follow the CloudFormation syntax. CloudFormation can be used to describe resource requirements, such as how many EC2 instances to launch, with what CPU power and networks configuration, and what access permissions to grant. Once a CloudFormation file has been crafted, a cloud system can be deployed on AWS with a single command. In addition, by exchanging CloudFormation files, it is possible for others to easily reproduce an identical cloud system. This concept of describing and managing cloud infrastructure programmatically is called Infrastructure as Code (IaC).

CloudFormation usually use a format called JSON (JavaScript Object Notation). The following code is an example excerpt of a CloudFormation file written in JSON.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

"Resources" : {

...

"WebServer": {

"Type" : "AWS::EC2::Instance",

"Properties": {

"ImageId" : { "Fn::FindInMap" : [ "AWSRegionArch2AMI", { "Ref" : "AWS::Region" },

{ "Fn::FindInMap" : [ "AWSInstanceType2Arch", { "Ref" : "InstanceType" }, "Arch" ] } ] },

"InstanceType" : { "Ref" : "InstanceType" },

"SecurityGroups" : [ {"Ref" : "WebServerSecurityGroup"} ],

"KeyName" : { "Ref" : "KeyName" },

"UserData" : { "Fn::Base64" : { "Fn::Join" : ["", [

"#!/bin/bash -xe\n",

"yum update -y aws-cfn-bootstrap\n",

"/opt/aws/bin/cfn-init -v ",

" --stack ", { "Ref" : "AWS::StackName" },

" --resource WebServer ",

" --configsets wordpress_install ",

" --region ", { "Ref" : "AWS::Region" }, "\n",

"/opt/aws/bin/cfn-signal -e $? ",

" --stack ", { "Ref" : "AWS::StackName" },

" --resource WebServer ",

" --region ", { "Ref" : "AWS::Region" }, "\n"

]]}}

},

...

},

...

},

Here, we have defined an EC2 instance named "WebServer". This is a rather long and complex description, but it specifies all necessary information to create an EC2 instance.

3.5.1. AWS CDK

As we saw in the previous section, CloudFormation is very complex to write, and there must not be any errors in any lines. Further, since CloudFormation is written with JSON, we cannot use useful concepts such as variables and classes as we do in modern programming languages (strictly speaking, CloudFormation has functions that are equivalent to variables). In addition, many parts of the CloudFormation files are repetitive, and many parts can be automated.

To solve this programmer’s pain, AWS Cloud Development Kit (CDK) is offered by AWS. CDK is a tool that automatically generates CloudFormations using a programming language such as Python. CDK is a relatively new tool, released in 2019, and is being actively developed (check the releases at GitHub repository to see how fast this library is being improved). CDK is supported by several languages including TypeScript (JavaScript), Python, and Java.

With CDK, programmers can use a familiar programming language to describe the deisred cloud resources and synthesize the CloudFormation files. In addition, CDK determines many of the common parameters automatically, which reduces the amount of coding.

The following is an example excerpt of CDK code using Python.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

from aws_cdk import (

core,

aws_ec2 as ec2,

)

class MyFirstEc2(core.Stack):

def __init__(self, scope, name, **kwargs):

super().__init__(scope, name, **kwargs)

vpc = ec2.Vpc(

... # some parameters

)

sg = ec2.SecurityGroup(

... # some parameters

)

host = ec2.Instance(

self, "MyGreatEc2",

instance_type=ec2.InstanceType("t2.micro"),

machine_image=ec2.MachineImage.latest_amazon_linux(),

vpc=vpc,

...

)

This code describes essentially the same thing as the JSON-based CloudFormation shown in the previous section. You can see that CDK code is much shorter and easier to understand than the very complicated CloudFormation file.

The focus of this book is to help you learn AWS concepts and techniques while writing code using CDK. In the later chapters, we will provide various hands-on exercises using CDK. To kick start, in the first hands-on, we will learn how to launch a simple EC2 instance using CDK.

4. Hands-on #1: Launching an EC2 instance

In the first hands-on session, we will create an EC2 instance (virtual server) using CDK, and log in to the server using SSH. After this hands-on, you will be able to set up your own server on AWS and run calculations as you wish!

4.1. Preparation

The source code for the hands-on is available on GitHub at handson/ec2-get-started.

|

This hands-on exercise can be performed within the free EC2 tier. |

First, we set up the environment for the exercise. This is a prerequisite for the hands-on sessions in later chapters as well, so make sure to do it now without mistakes.

-

AWS account: You will need a personal AWS account to run the hands-on. See Section 14.1 for obtaining an AWS account.

-

Python and Node.js: Python (3.6 or higher) and Node.js (12.0 or higher) must be installed in order to run this hands-on.

-

AWS CLI: For information on installing the AWS CLI, see Section 14.3. Be sure to set up the authentication key described here.

-

AWS CDK: For information on installing the AWS CDK, see Section 14.4.

-

Downloading the source code: Download the source code of the hands-on program from GitHub using the following command.

$ git clone https://github.com/tomomano/learn-aws-by-coding.gitAlternatively, you can go to https://github.com/tomomano/learn-aws-by-coding and click on the download button in the upper right corner.

Using Docker image for the hands-on exercises

We provide a Docker image with the required programs installed, such as Python, Node.js, and AWS CDK. The source code of the hands-on program has also been included in the image. If you already know how to use Docker, then you can use this image to immediately start the hands-on tutorials without having to install anything else.

See Section 14.8 for more instructions.

4.2. SSH

SSH (secure shell) is a tool to securely access Unix-like remote servers. In this hands-on, we will use SSH to access a virtual server. For readers who are not familiar with SSH, here we give a brief guidance.

All SSH communication is encrypted, so confidential information can be sent and received securely over the Internet. For this hands-on, you need to have an SSH client installed on your local machine to access the remote server. SSH clients come standard on Linux and Mac. For Windows, it is recommended to install WSL to use an SSH client (see [environments]).

The basic usage of the SSH command is shown below.

<host name> is the IP address or DNS hostname of the server to be accessed.

The <user name> is the user name of the server to be connected to.

$ ssh <user name>@<host name>SSH can be authenticated using plain text passwords, but for stronger security, it is strongly recommended that you use Public Key Cryptography authentication, and EC2 only allows access in this way. We do not explain the theory of public key cryptography here. The important point in this hands-on is that the EC2 instance holds the public key, and the client computer (the reader’s local machine) holds the private key. Only the computer with the private key can access the EC2 instance. Conversely, if the private key is leaked, a third party will be able to access the server, so manage the private key with care to ensure that it is never leaked.

The SSH command allows you to specify the private key file to use for login with the -i or --identity_file option.

For example, use the following command.

$ ssh -i Ec2SecretKey.pem <user name>@<host name>4.3. Reading the application source code

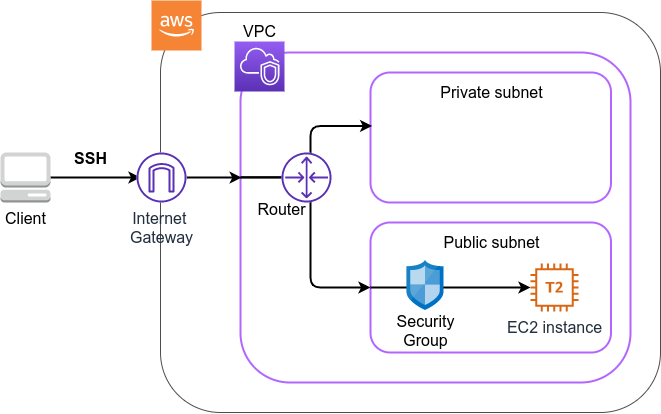

Figure 10 shows an overview of the application we will be deploying in this hands-on.

In this application, we first set up a private virtual network environment using VPC (Virtual Private Cloud). The virtual servers of EC2 (Elastic Compute Cloud) are placed inside the public subnet of the VPC. For security purposes, access to the EC2 instance is restricted by the Security Group (SG). We will use SSH to access the virtual server and perform a simple calculation. We use AWS CDK to construct this application.

Let’s take a look at the source code of the CDK app (handson/ec2-get-started/app.py).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

class MyFirstEc2(core.Stack):

def __init__(self, scope: core.App, name: str, key_name: str, **kwargs) -> None:

super().__init__(scope, name, **kwargs)

(1)

vpc = ec2.Vpc(

self, "MyFirstEc2-Vpc",

max_azs=1,

cidr="10.10.0.0/23",

subnet_configuration=[

ec2.SubnetConfiguration(

name="public",

subnet_type=ec2.SubnetType.PUBLIC,

)

],

nat_gateways=0,

)

(2)

sg = ec2.SecurityGroup(

self, "MyFirstEc2Vpc-Sg",

vpc=vpc,

allow_all_outbound=True,

)

sg.add_ingress_rule(

peer=ec2.Peer.any_ipv4(),

connection=ec2.Port.tcp(22),

)

(3)

host = ec2.Instance(

self, "MyFirstEc2Instance",

instance_type=ec2.InstanceType("t2.micro"),

machine_image=ec2.MachineImage.latest_amazon_linux(),

vpc=vpc,

vpc_subnets=ec2.SubnetSelection(subnet_type=ec2.SubnetType.PUBLIC),

security_group=sg,

key_name=key_name

)

| 1 | First, we define the VPC. |

| 2 | Next, we define the security group. Here, connections from any IPv4 address to port 22 (used for SSH connections) are allowed. All other connections are rejected. |

| 3 | Finally, an EC2 instance is created with the VPC and SG created above.

The instance type is selected as t2.micro, and Amazon Linux is used as the OS. |

Let us explain each of these points in more detail.

4.3.1. VPC (Virtual Private Cloud)

VPC is a tool for building a private virtual network environment on AWS. In order to build advanced computing systems, it is necessary to connect multiple servers, which requires management of the network addresses. VPC is useful for such purposes.

In this hands-on, only one server is launched, so the benefits of VPC may not be clear to you. However, since AWS specification require that EC2 instances must be placed inside a VPC, we have configured a minimal VPC in this application.

|

For those who are interested, here is a more advanced explanation of the VPC code.

|

4.3.2. Security Group

A security group (SG) is a virtual firewall that can be assigned to an EC2 instance. For example, you can allow or deny connections coming from a specific IP address (inbound traffic restriction), and prohibit access to a specific IP address (outbound traffic restriction).

Let’s look at the corresponding part of the code.

1

2

3

4

5

6

7

8

9

sg = ec2.SecurityGroup(

self, "MyFirstEc2Vpc-Sg",

vpc=vpc,

allow_all_outbound=True,

)

sg.add_ingress_rule(

peer=ec2.Peer.any_ipv4(),

connection=ec2.Port.tcp(22),

)

Here, in order to allow SSH connections from the outside, we specified sg.add_ingress_rule(peer=ec2.Peer.any_ipv4(), connection=ec2.Port.tcp(22)), which means that access to port 22 is allowed from all IPv4 addresses.

In addition, the parameter allow_all_outbound=True is set so that the instance can access the Internet freely to download resources.

|

SSH by default uses port 22 for remote access. |

|

From a security purpose, it is preferable to allow SSH connections only from specific locations such as home, university, or workplace. |

4.3.3. EC2 (Elastic Compute Cloud)

EC2 is a service for setting up virtual servers on AWS. Each virtual server in a running state is called an instance. (However, in colloquial communication, the terms server and instance are often used interchangeably.)

EC2 provides a variety of instance types to suit many use cases. Table 2 lists some representative instance types. A complete list of EC2 instance types can be found at Official Documentation "Amazon EC2 Instance Types".

| Instance | vCPU | Memory (GiB) | Network bandwidth (Gbps) | Price per hour ($) |

|---|---|---|---|---|

t2.micro |

1 |

1 |

- |

0.0116 |

t2.small |

1 |

2 |

- |

0.023 |

t2.medium |

2 |

4 |

- |

0.0464 |

c5.24xlarge |

96 |

192 |

25 |

4.08 |

c5n.18xlarge |

72 |

192 |

100 |

3.888 |

x1e.16xlarge |

64 |

1952 |

10 |

13.344 |

As can be seen in Table 2, the virtual CPUs (vCPUs) can be configured from 1 to 96 cores, memory from 1GB to over 2TB, and network bandwidth up to 100Gbps.

The price per hour increases approximately linearly with the number of vCPUs and memories allocated.

EC2 keeps track of the server running time in seconds, and the usage fee is determined in proportion to the usage time.

For example, if an instance of t2.medium is launched for 10 hours, a fee of 0.0464 * 10 = $0.464 will be charged.

|

AWS has a

free EC2 tier.

With this, |

|

The price listed in Table 2 is for the |

|

The above price of $0.0116 / hour for t2.micro is for the on-demand instance type. In addition to on-demand instance type, there is another type of instance called spot instance. The idea of spot instances is to rent out the excess free CPUs temporarily available at AWS data center to users at a discount. Therefore, spot instances are offered at a much lower price, but the instance may be forcibly shut down when the load on the AWS data center increases, even if the user’s program is still running. There have been many reports of spot instance being used to reduce costs in applications such as scientific computing and web servers. |

Let’s take a look at the part of the code that defines the EC2 instance.

1

2

3

4

5

6

7

8

9

host = ec2.Instance(

self, "MyFirstEc2Instance",

instance_type=ec2.InstanceType("t2.micro"),

machine_image=ec2.MachineImage.latest_amazon_linux(),

vpc=vpc,

vpc_subnets=ec2.SubnetSelection(subnet_type=ec2.SubnetType.PUBLIC),

security_group=sg,

key_name=key_name

)

Here, we have selected the instance type t2.micro.

In addition, the machine_image is set to

Amazon Linux

(Machine image is a concept similar to OS.

We will discuss machine image in more detail in Section 6.)

In addition, the VPC and SG defined above are assigned to this instance.

This is a brief explanation of the program we will be using. Although it is a minimalist program, we hope it has given you an idea of the steps required to create a virtual server.

4.4. Deploying the application

Now that we understand the source code, let’s deploy the application on AWS. Again, it is assumed that you have finished the preparations described inSection 4.1.

4.4.1. Installing Python dependencies

The first step is to install the Python dependency libraries. In the following, we use venv as a tool to manage Python libraries.

First, let’s move to the directory handson/ec2-get-started.

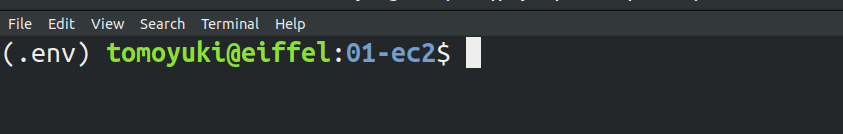

$ cd handson/ec2-get-startedAfter moving the directory, create a new virtual environment with venv and run the installation with pip.

$ python3 -m venv .env

$ source .env/bin/activate

$ pip install -r requirements.txtThis completes the Python environment setup.

|

A quick tutorial on |

4.4.2. Setting AWS access key

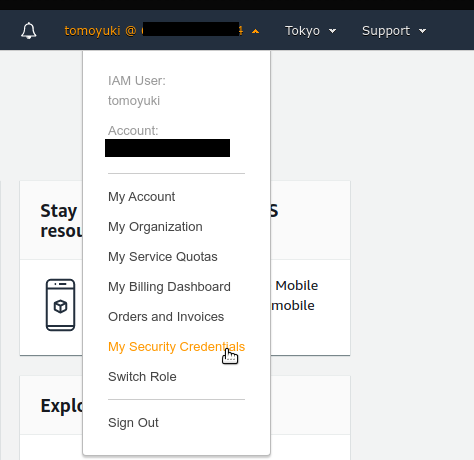

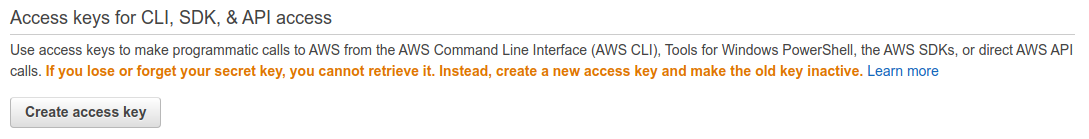

To use the AWS CLI and AWS CDK, you need to have an AWS access key set up. Refer to Section 14.2 for issuing a access key. After issuing the access key, refer to Section 14.3 to configure the command line settings.

To summarize the procedure shortly, the first method is to set environment variables such as AWS_ACCESS_KEY_ID.

The second method is to store the authentication information in ~/.aws/credentials.

Setting an access key is a common step in using the AWS CLI/CDK, so make sure you understand it well.

4.4.3. Generating a SSH key pair

We login to the EC2 instance using SSH. Before creating an EC2 instance, you need to prepare an SSH public/private key pair to be used exclusively in this hands-on exercise.

Using the following AWS CLI command, let’s generate a key named OpenSesame.

$ export KEY_NAME="OpenSesame"

$ aws ec2 create-key-pair --key-name ${KEY_NAME} --query 'KeyMaterial' --output text > ${KEY_NAME}.pemWhen you execute this command, a file named OpenSesame.pem will be created in the current directory.

This is the private key to access the server.

To use this key with SSH, move the key to the directory ~/.ssh/.

To prevent the private key from being overwritten or viewed by a third party, you must set the access permission of the file to 400.

$ mv OpenSesame.pem ~/.ssh/

$ chmod 400 ~/.ssh/OpenSesame.pem4.4.4. Deploy

We are now ready to deploy our EC2 instance!

Use the following command to deploy the application on AWS.

The option -c key_name="OpenSesame" specifies to use the key named OpenSesame that we generated earlier.

$ cdk deploy -c key_name="OpenSesame"When this command is executed, the VPC, EC2, and other resources will be deployed on AWS.

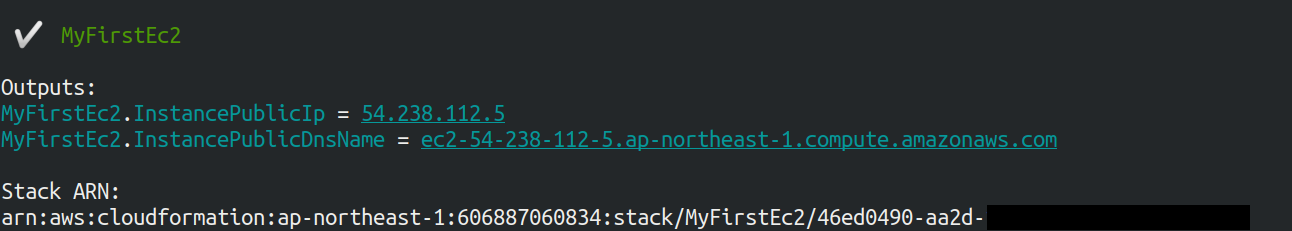

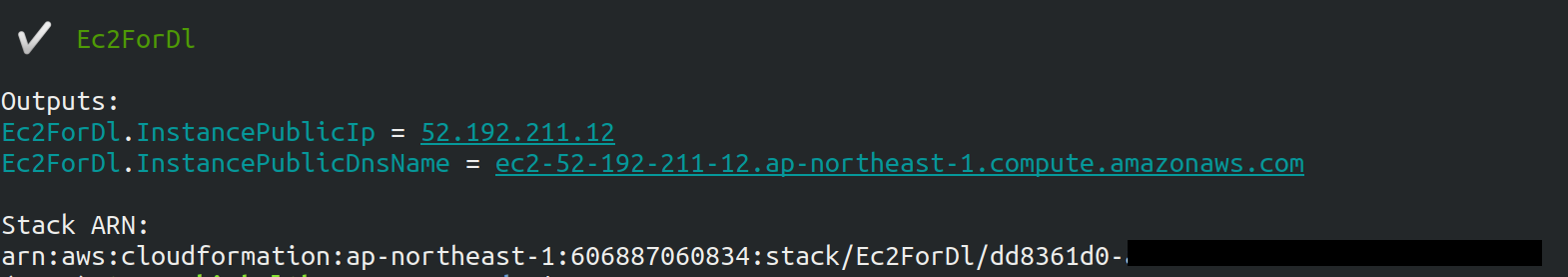

At the end of the command output, you should get an output like Figure 11.

In the output, the digits following InstancePublicIp is the public IP address of the launched instance.

The IP address is randomly assigned for each deployment.

4.4.5. Log in with SSH

Let us log in to the instance using SSH.

$ ssh -i ~/.ssh/OpenSesame.pem ec2-user@<IP address>Note that the -i option specifies the private key that was generated earlier.

Since the EC2 instance by default has a user named ec2-user, use this as a login user name.

Lastly, replace <IP address> with the IP address of the EC2 instance you created (e.g., 12.345.678.9).

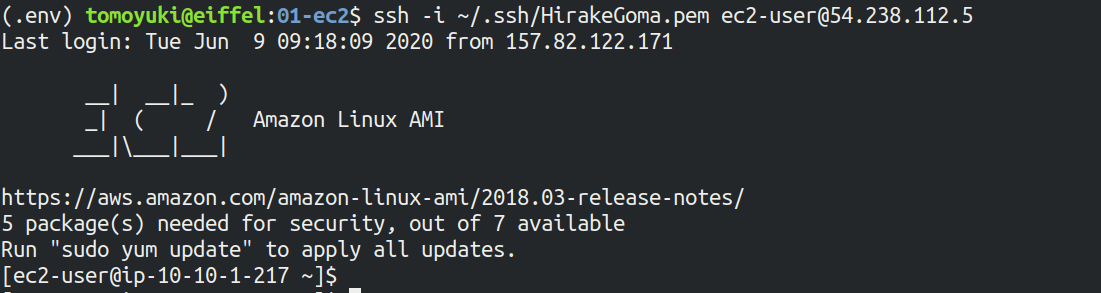

If the login is successful, you will be taken to a terminal window like Figure 12.

Since you are logging in to a remote server, make sure the prompt looks like [ec2-user@ip-10-10-1-217 ~]$.

Congratulations! You have successfully launched an EC2 virtual instance on AWS, and you can access it remotely!

4.4.6. Exploring the launched EC2 instance

Now that we have a new instance up and running, let’s play with it.

Inside the EC2 instance you logged into, run the following command. The command will output the CPU information.

$ cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU E5-2676 v3 @ 2.40GHz

stepping : 2

microcode : 0x43

cpu MHz : 2400.096

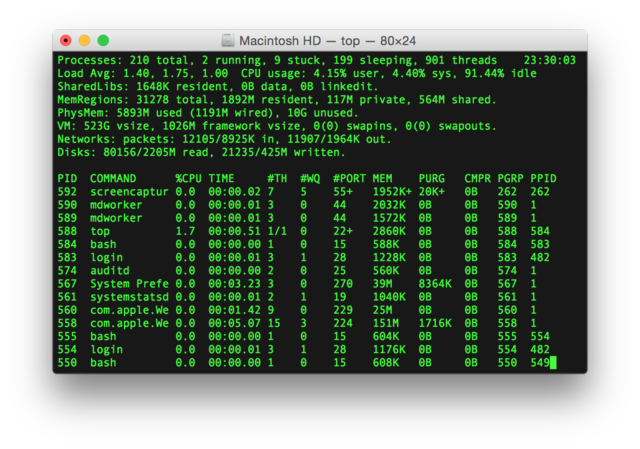

cache size : 30720 KBNext, let’s use top command and show the running processes and memory usage.

$ top -n 1

top - 09:29:19 up 43 min, 1 user, load average: 0.00, 0.00, 0.00

Tasks: 76 total, 1 running, 51 sleeping, 0 stopped, 0 zombie

Cpu(s): 0.3%us, 0.3%sy, 0.1%ni, 98.9%id, 0.2%wa, 0.0%hi, 0.0%si, 0.2%st

Mem: 1009140k total, 270760k used, 738380k free, 14340k buffers

Swap: 0k total, 0k used, 0k free, 185856k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1 root 20 0 19696 2596 2268 S 0.0 0.3 0:01.21 init

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

3 root 20 0 0 0 0 I 0.0 0.0 0:00.00 kworker/0:0Since we are using t2.micro instance, we have 1009140k = 1GB memory in the virtual instance.

The instance we started has Python 2 installed, but not Python 3. Let’s install Python 3.6. The installation is easy.

$ sudo yum update -y

$ sudo yum install -y python36Let’s start Python 3 interpreter.

$ python3

Python 3.6.10 (default, Feb 10 2020, 19:55:14)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-28)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>>To exit from the interpreter, use Ctrl + D or type exit().

So, that’s it for playing around on the server (if you’re interested, you can try different things!). Log out from the instance with the following command.

$ exit4.4.7. Observing the resources from AWS console

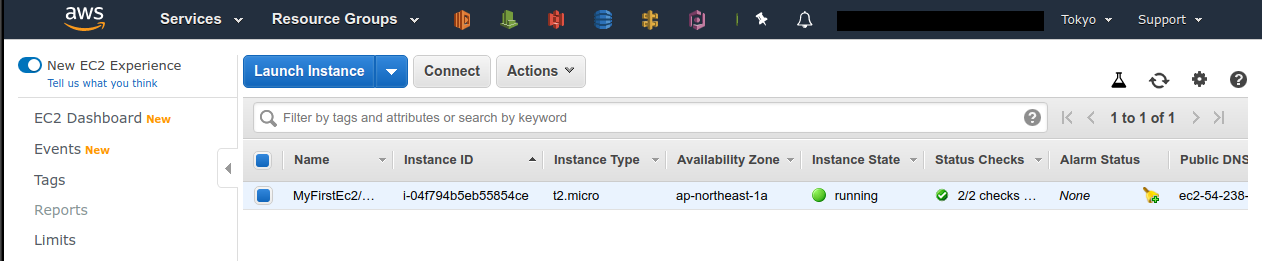

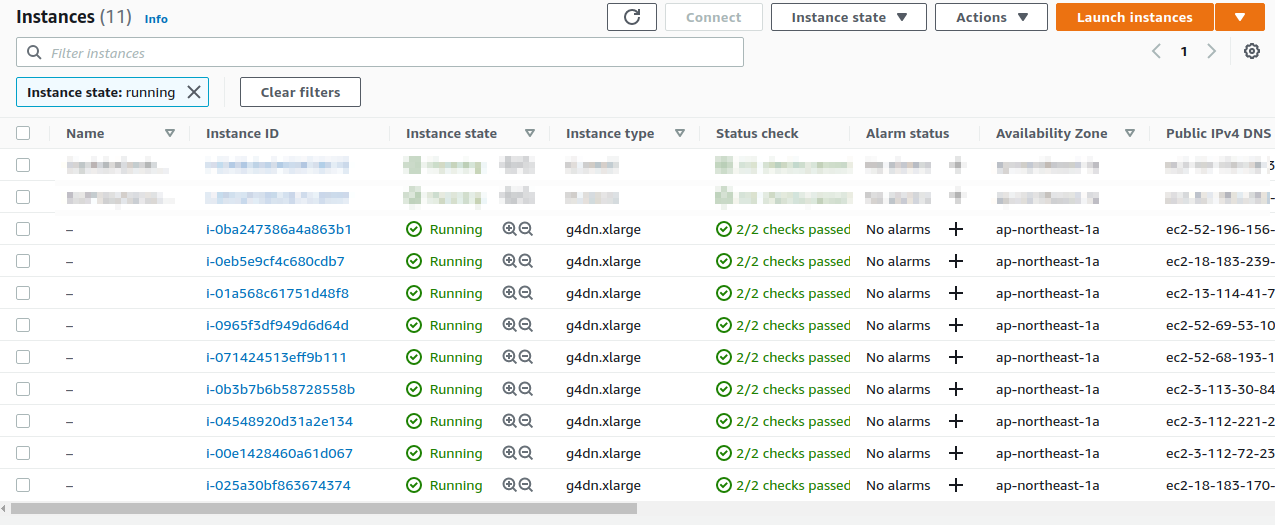

So far we have performed all EC2-related operations from the command line. Operations such as checking the status of an EC2 instance or shutting down a server can also be performed from the AWS console. Let’s take a quick look at this.

First, open a web browser and log in to the AWS console.

Once you are logged in, search EC2 from Services and go to the EC2 dashboard.

Next, navigate to Instances in the left sidebar.

You should get a screen like Figure 13.

On this screen, you can check the instances under your account.

Similarly, you can also check the VPC and SG from the console.

|

Make sure that the correct region (in this case, |

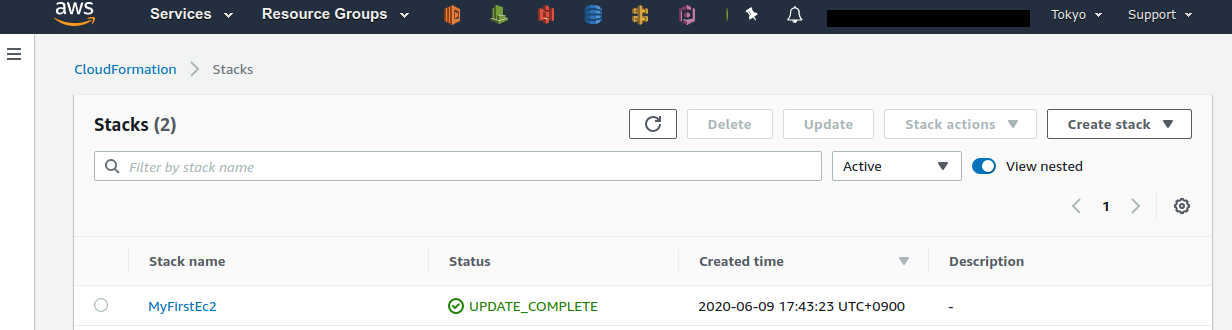

As mentioned in the previous chapter, the application deployed here is managed as a CloudFormation stack.

A stack refers to a group of AWS resources.

In this case, VPC, SG, and EC2 are included in the same stack.

From the AWS console, let’s go to the CloudFormation dashboard (Figure 14).

You should be able find a stack named "MyFirstEc2". If you click on it and look at the contents, you will see that EC2, VPC, and other resources are associated to this stack.

4.4.8. Deleting the stack

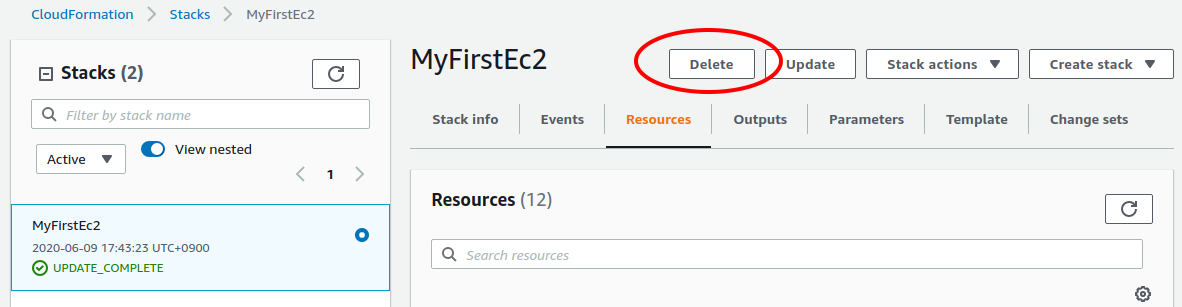

We have explained everything that was to be covered in the first hands-on session. Finally, we must delete the stack that is no longer in use. There are two ways to delete a stack.

The first method is to press the "Delete" button on the Cloudformation dashboard (Figure 15).

Then, the status of the stack will change to "DELETE_IN_PROGRESS", and when the deletion is completed, the stack will disappear from the list of CloudFormation stacks.

The second method is to use the command line. Let’s go back to the command line where we ran the deployment. Then, execute the following command.

$ cdk destroyWhen you execute this command, the stack will be deleted. After deleting the stack, make sure for yourself that all the VPCs, EC2s, etc. have disappeared without a trace. Using CloudFormation is very convenient because it allows you to manage and delete all related AWS resources at once.

|

Make sure you delete your own stack! If you do not do so, you will continue to be charged for the EC2 instance! |

Also, delete the SSH key pair created for this hands-on, as it is no longer needed. First, delete the public key registered on the EC2 side. This can be done in two ways: from the console or from the command line.

To do this from the console, go to the EC2 dashboard and select Key Pairs from the left sidebar.

When a list of keys is displayed, check the key labeled OpenSesame and execute Delete from Actions in the upper right corner of the screen (Figure 16).

To do the same operation from the command line, use the following command:

$ aws ec2 delete-key-pair --key-name "OpenSesame"Lastly, delete the key from your local machine.

$ rm -f ~/.ssh/OpenSesame.pemNow, we’re all done cleaning up the cloud.

|

If you frequently start EC2 instances, you do not need to delete the SSH key every time. |

4.5. Summary

This is the end of the first part of the book. We hope you have been able to follow the contents without much trouble.

In Section 2, the definition of cloud and important terminology were explained, and then the reasons for using cloud were discussed. Then, in Section 3, AWS was introduced as a platform to learn about cloud computing, and the minimum knowledge and terminology required to use AWS were explained. In the hands-on session in Section 4, we used AWS CLI and AWS CDK to set up our own private server on AWS.

You can now experience how easy it is to start up and remove virtual servers (with just a few commands!). We mentioned in Section 2 that the most important aspect of the cloud is the ability to dynamically expand and shrink computational resources. We hope that the meaning of this phrase has become clearer through the hands-on experience. Using this simple tutorial as a template, you can customize the code for your own appplications, such as creating a virtual server to host your web pages, prepare an EC2 instance with a large number of cores to run scientific computations, and many more.

In the next chapter, you will experience solving more realistic problems based on the cloud technology you have learned. Stay tuned!

5. Scientific computing and machine learning in the cloud

In the modern age of computing, computational simulation and big data analysis are the major driving force of scientific and engineering research. The cloud is the best place to perform these large-scale computations. In Part II, which starts with this section, you will experience how to run scientific computation on the cloud through several hands-on experiences. As a specific subject of scientific computing, here we will focus on machine learning (deep learning).

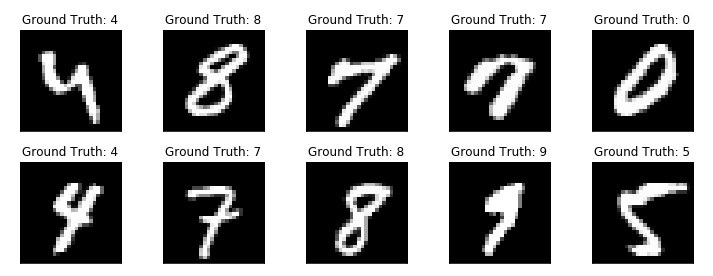

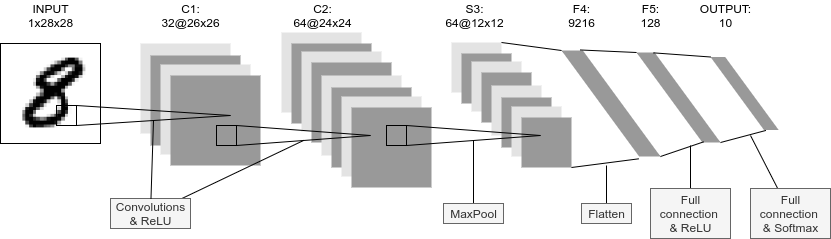

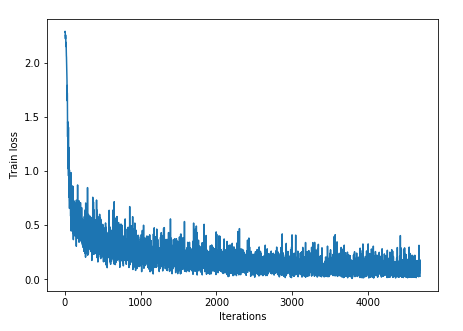

In this book, we will use the PyTorch library to implement deep learning algorithms, but no knowledge of deep learning or PyTorch is required. The lecture focuses on why and how to run deep learning in the cloud, so we will not go into the details of the deep learning algorithm itself. Interested readers are refered to other books for the theory and implementation of deep neural network (column below).

5.1. Why use the cloud for machine learning?

The third AI boom started around 2010, and consequently machine learning is attracting a lot of attention not only in academic research but also in social and business contexts. In particular, algorithms based on multi-layered neural networks, known as deep learning, have revolutionized image recognition and natural language processing by achieving remarkably higher performance than previous algorithms.

The core feature of deep learning is its large number of parameters. As the layers become deeper, the number of weight parameters connecting the neurons between the layers increases. For example, the latest language model, GPT-3, contains as many as 175 billion parameters. With such a vast number of parameters, deep learning can achieve high expressive power and generalization performance.

Not only GPT-3, but also recent neural networks that achieve SOTA (State-of-the-Art) performance frequently contain parameters in the order of millions or billions. Naturally, training such a huge neural network is computationally expensive. As a result, it is not uncommon to see cases where the training takes more than a full day with a single workstation. With the rapid development of deep learning, the key to maximize research and business productivity is how to optimize the neural network with high throughput. The cloud is a very effective means to solve such problems! As we have seen in Section 4, the cloud can be used to dynamically launch a large number of instances, and execute computations in parallel. In addition, there are specially designed chips (e.g. GPUs) optimized for deep learning operations to accelerate the computation. By using the cloud, you gain access to inexhaustible supply of such specialized computing chips. In fact, it was reported that the training of GPT-3 was performed using Microsoft’s cloud, although the details have not been disclosed.

|

The details of the computational resources used in GPT-3 project are not disclosed in the paper, but there is an interesting discussion at Lambda’s blog (Lambda is a cloud service specializing in machine learning). According to the article, it would take 342 years and $4.6 million in cloud fees to train 175 billion parameters if a single GPU (NVIDIA V100) was used. The GPT-3 team was able to complete the training in a realistic amount of time by distributing the processing across multiple GPUs, but it is clear that this level of modeling can only be achieved by pushing the limits of cloud technology. |

5.2. Accelerating deep learning by GPU

Here we will briefly talk about Graphics Processing Unit or GPU, which serves as an indispensable technology for deep learning.

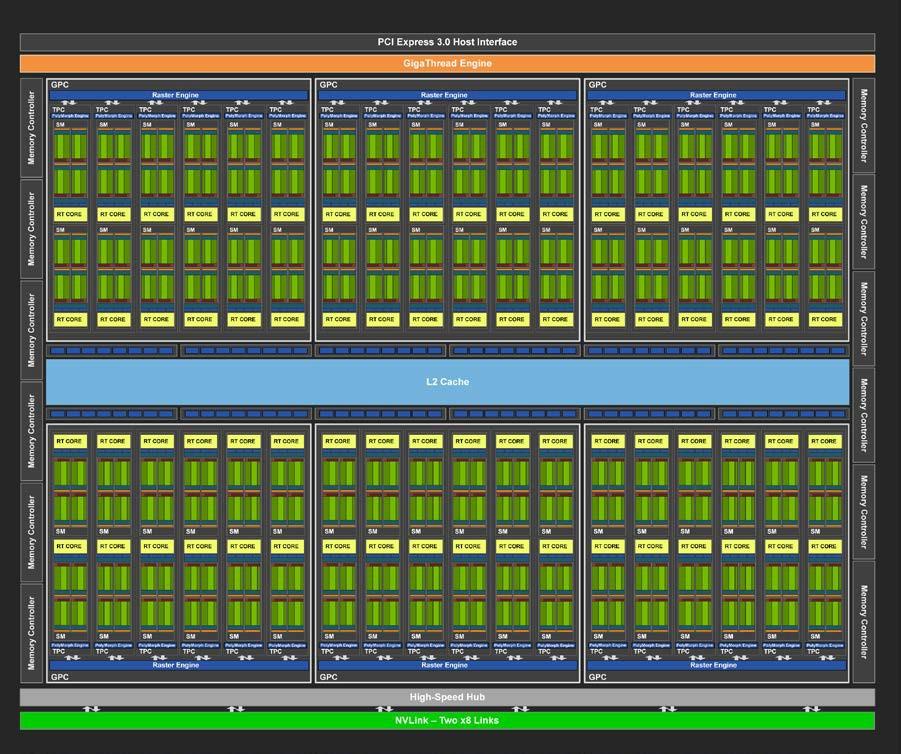

As the name suggests, a GPU is originally a dedicated computing chip for producing computer graphics. In contrast to a CPU (Central Processing Unit) which is capable of general computation, a GPU is designed specifically for graphics operations. It can be found in familiar game consoles such as XBox and PS5, as well as in high-end notebook and desktop computers. In computer graphics, millions of pixels arranged on a screen need to be updated at video rates (30 fps) or higher. To handle this task, a single GPU chip contain hundreds to thousands of cores, each with relatively small computing power (Figure 17), and processes the pixels on the screen in parallel to achieve real-time rendering.

Although GPUs were originally developed for the purpose of computer graphics, since around 2010, some advanced programmers and engineers started to use GPU’s high parallel computing power for calculations other than graphics, such as scientific computations. This idea is called General-purpose computing on GPU or GPGPU. Due to its chip design, GPGPU is suitable for simple and regular operations such as matrix operations, and can achieve much higher speed than CPUs. Currently, GPGPU is employed in many fields such as molecular dynamics, weather simulation, and machine learning.

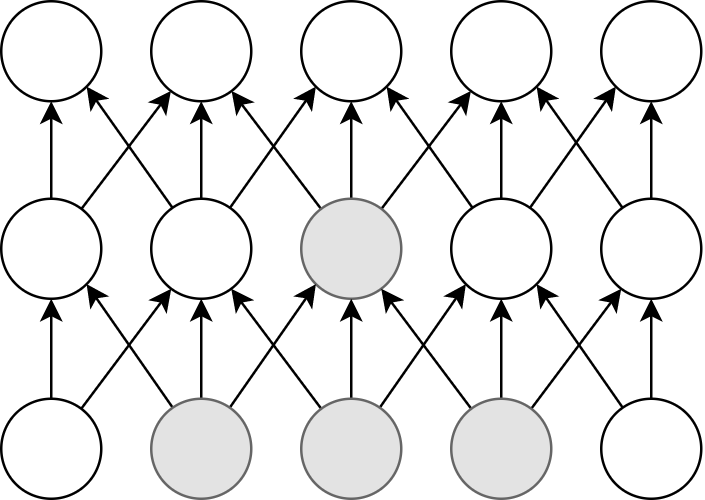

The operation that occurs most frequently in deep learning is the convolution operation, which transfers the output of neurons to the neurons in the next layer (Figure 18). Convolution is exactly the kind of operations that GPUs are good at, and by using GPUs instead of CPUs, learning can be dramatically accelerated, up to several hundred times.

Thus, GPUs are indispensable for machine learning calculations. However, they are quite expensive. For example, NVIDIA’s Tesla V100 chip, designed specifically for scientific computing and machine learning, is priced at about one million yen (ten thousand dollars). One million yen is quite a large investment just to start a machine learning project. The good news is, if you use the cloud, you can use GPUs with zero initial cost!

To use GPUs in AWS, you need to select an EC2 instance type equipped with GPUs, such as P2, P3, G3, and G4 instance family.

Table 3 lists representative GPU-equipped instance types as of this writing.

| Instance | GPUs | GPU model | GPU Mem (GiB) | vCPU | Mem (GiB) | Price per hour ($) |

|---|---|---|---|---|---|---|

p3.2xlarge |

1 |

NVIDIA V100 |

16 |

8 |

61 |

3.06 |

p3n.16xlarge |

8 |

NVIDIA V100 |

128 |

64 |

488 |

24.48 |

p2.xlarge |

1 |

NVIDIA K80 |

12 |

4 |

61 |

0.9 |

g4dn.xlarge |

1 |

NVIDIA T4 |

16 |

4 |

16 |

0.526 |

As you can see from Table 3, the price of GPU instances is higher than the CPU-only instances. Also note that older generation GPUs (K80 compared to V100) are offered at a lower price. The number of GPUs per instance can be selected from one to a maximum of eight.

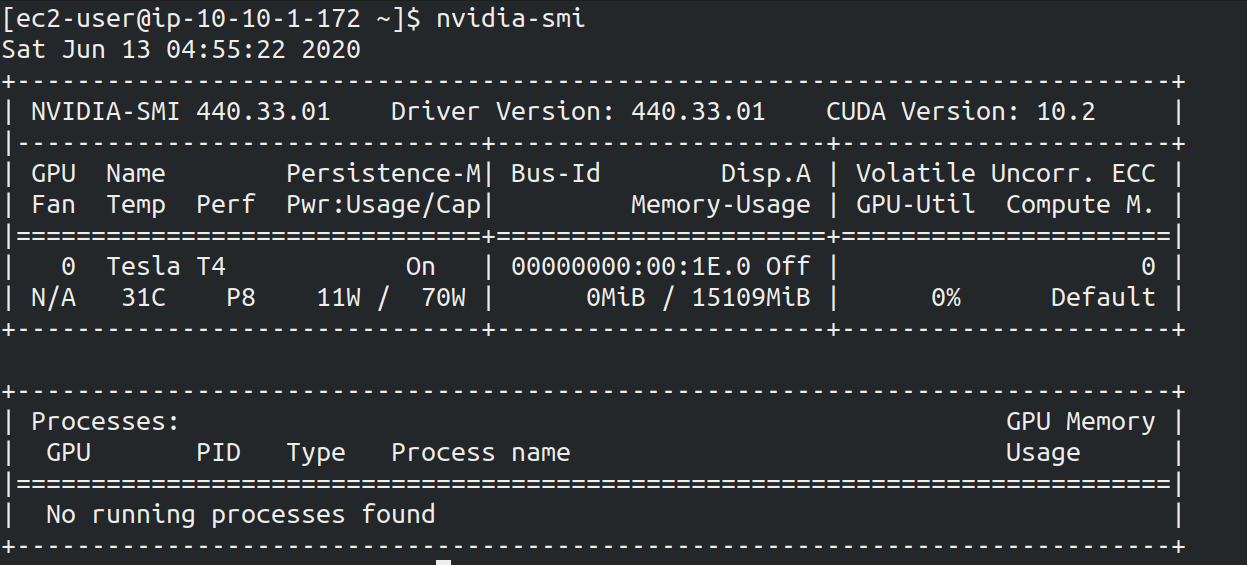

The cheapest GPU instance type is g4dn.xlarge, which is equipped with a low-cost and energy-efficient NVIDIA T4 chip.

In the hands-on session in the later chapters, we will use this instance to perform deep learning calculations.

|

The prices in Table 3 are for |

|

The cost for |

6. Hands-on #2: Running Deep Learning on AWS

6.1. Preparation

In the second hands-on session, we will launch an EC2 instance equipped with a GPU and practice training and inference of a deep learning model.

The source code for the hands-on is available on GitHub at handson/mnist.

To run this hands-on, it is assumed that the preparations described in the first hands-on (Section 4.1) have been completed. There are no other preparations required.

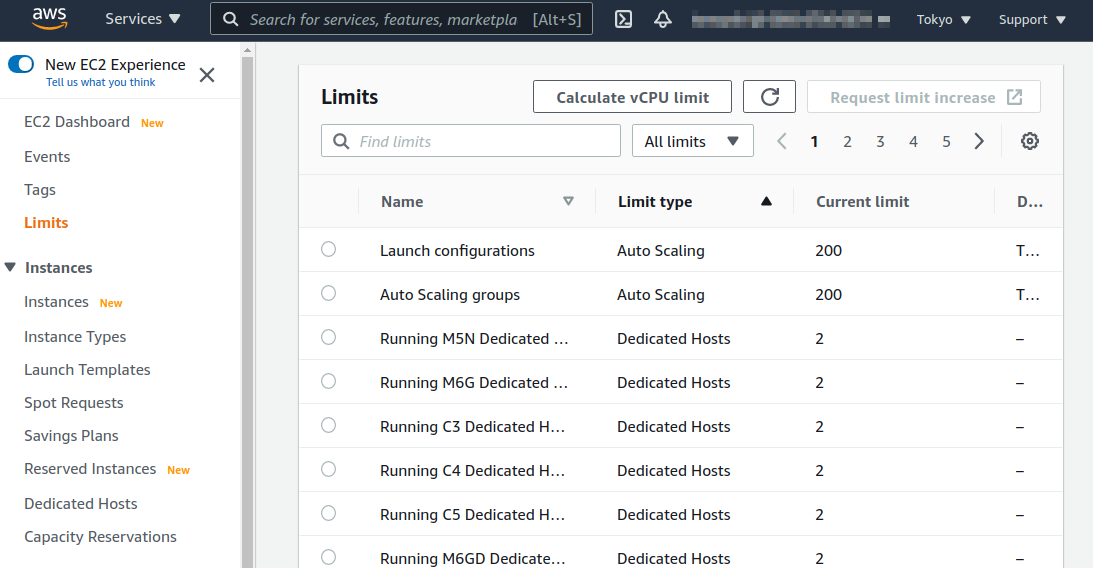

|

In the initial state of your AWS account, the launch limit for G-type instances may be set to 0.

To check this, open the EC2 dashbord from the AWS console, and select If it is set to 0, you need to send a request to increase the limit via the request form. For details, see official documentation "Amazon EC2 service quotas". |

|

This hands-on uses a |

6.2. Reading the application source code

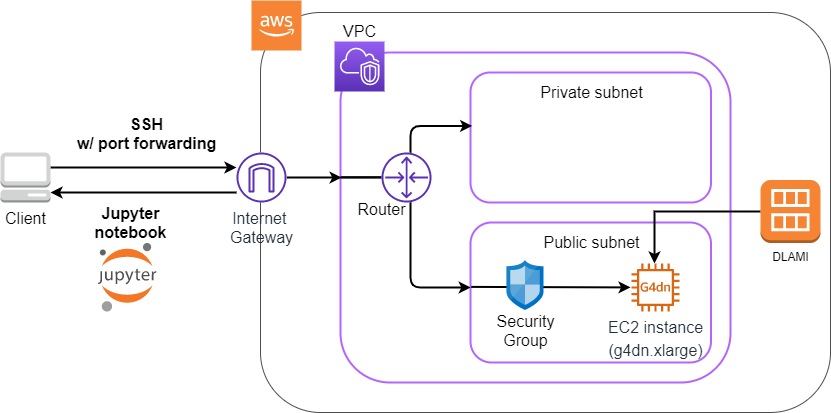

Figure 19 shows an overview of the application we will be deploying in this hands-on.

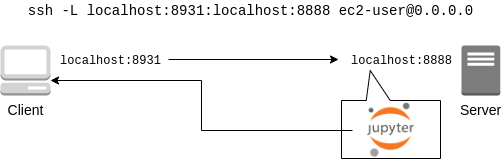

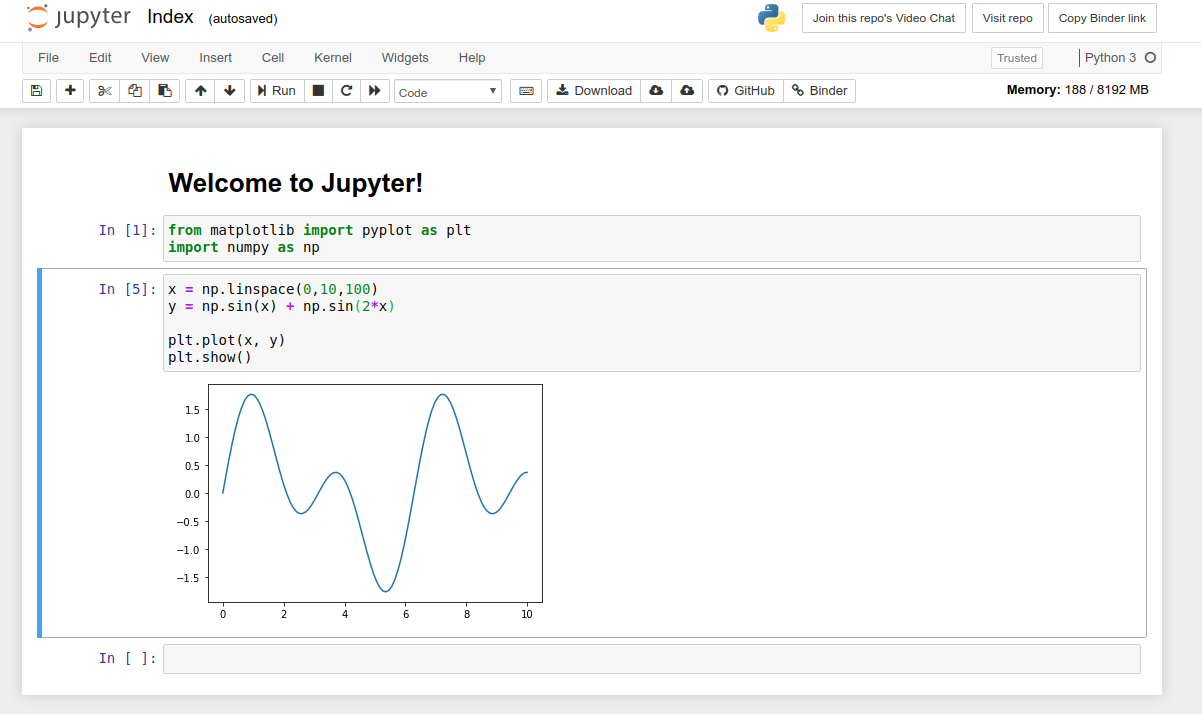

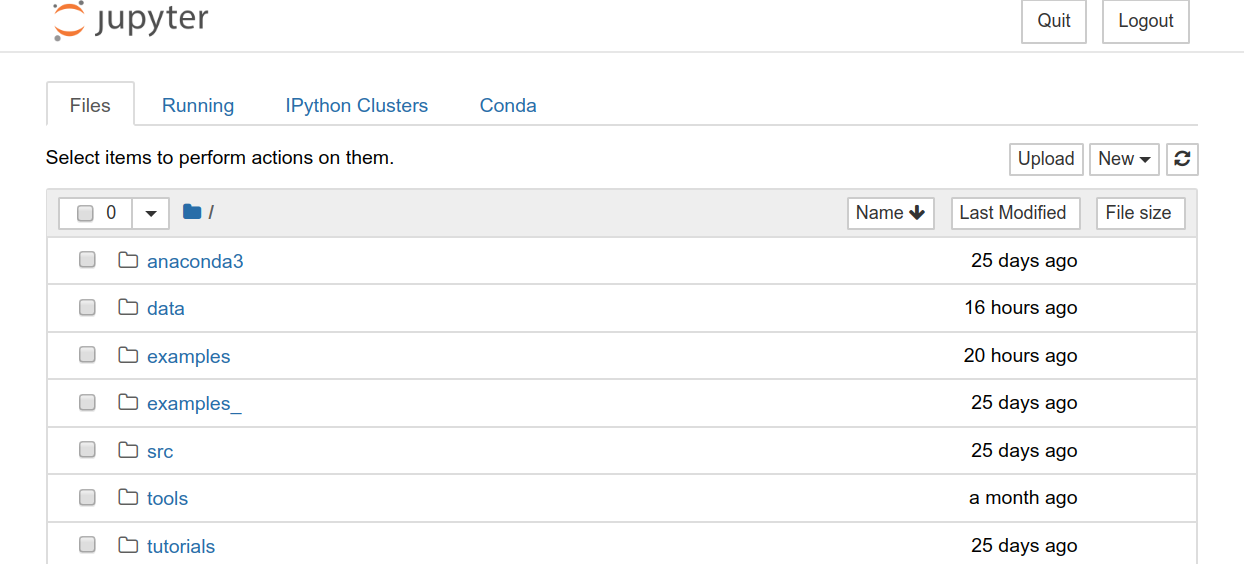

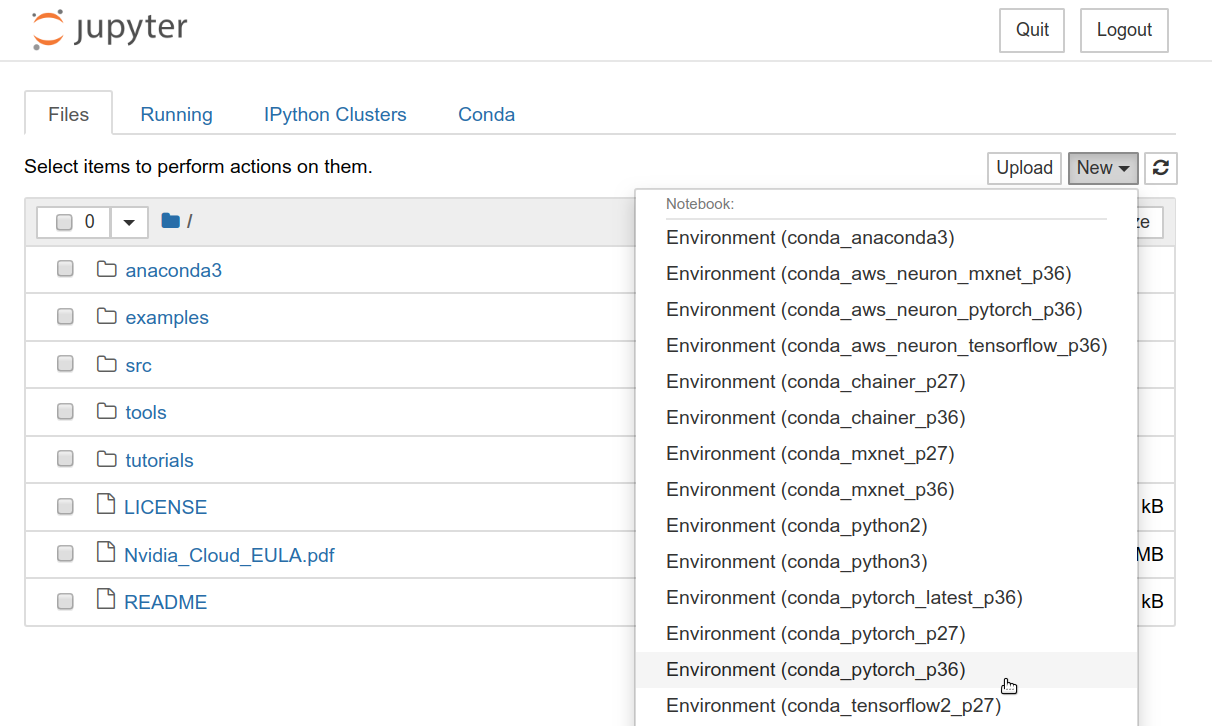

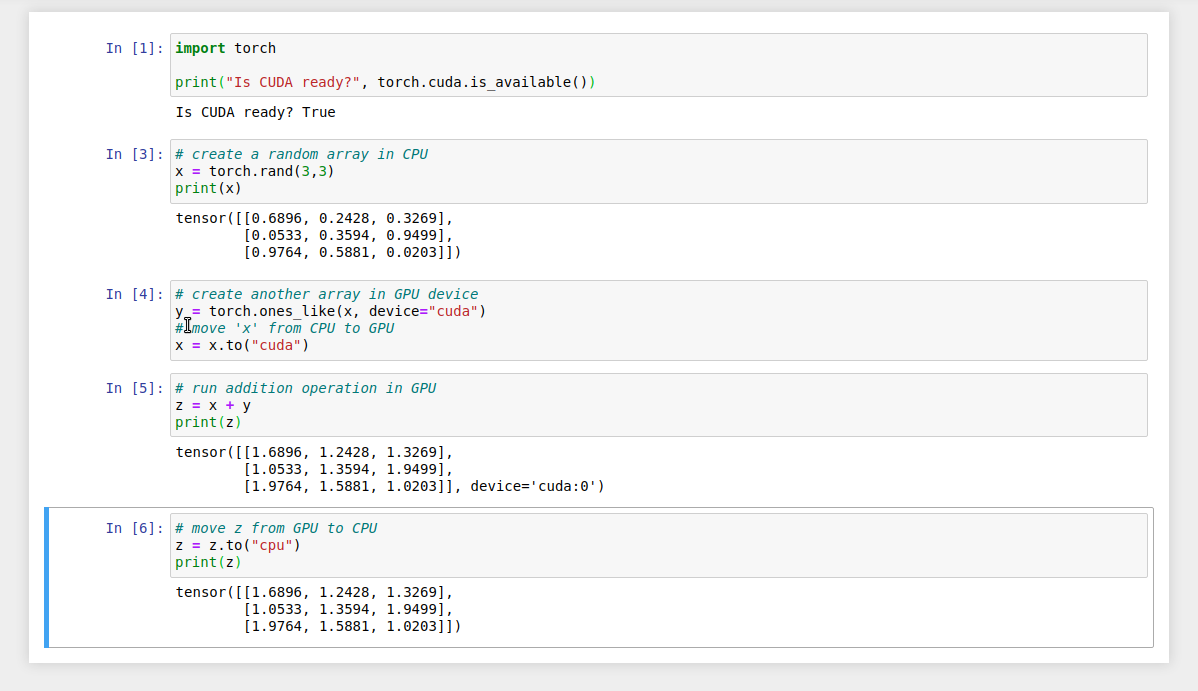

You will notice that many parts of the figure are the same as the application we created in the first hands-on session (Figure 10). With a few changes, we can easily build an environment to run deep learning! The three main changes are as follows.

-

Use a

g4dn.xlargeinstance type equipped with a GPU. -

Use a DLAMI (see below) with the programs for deep learning pre-installed.

-

Connect to the server using SSH with port forwarding option, and write and execute codes using Jupyter Notebook (see below) running on the server.

Let’s have a look at the source code (handson/mnist/app.py). The code is almost the same as in the first hands-on. We will explain only the parts where changes were made.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

class Ec2ForDl(core.Stack):

def __init__(self, scope: core.App, name: str, key_name: str, **kwargs) -> None:

super().__init__(scope, name, **kwargs)

vpc = ec2.Vpc(

self, "Ec2ForDl-Vpc",

max_azs=1,

cidr="10.10.0.0/23",

subnet_configuration=[

ec2.SubnetConfiguration(

name="public",

subnet_type=ec2.SubnetType.PUBLIC,

)

],

nat_gateways=0,

)

sg = ec2.SecurityGroup(

self, "Ec2ForDl-Sg",

vpc=vpc,

allow_all_outbound=True,

)

sg.add_ingress_rule(

peer=ec2.Peer.any_ipv4(),

connection=ec2.Port.tcp(22),

)

host = ec2.Instance(

self, "Ec2ForDl-Instance",

instance_type=ec2.InstanceType("g4dn.xlarge"), (1)

machine_image=ec2.MachineImage.generic_linux({

"us-east-1": "ami-060f07284bb6f9faf",

"ap-northeast-1": "ami-09c0c16fc46a29ed9"

}), (2)

vpc=vpc,

vpc_subnets=ec2.SubnetSelection(subnet_type=ec2.SubnetType.PUBLIC),

security_group=sg,

key_name=key_name

)

| 1 | Here, we have selected the g4dn.xlarge instance type (in the first hands-on, it was t2.micro).

As already mentioned in Section 5, the g4dn.xlarge is an instance with a low-cost model GPU called NVIDIA T4.

It has 4 CPU cores and 16GB of main memory. |

| 2 | Here, we are using

Deep Learning Amazon Machine Image; DLAMI,

an AMI with varisous programs for deep learning pre-installed.

Note that in the first hands-on, we used an AMI called Amazon Linux.

The ID of the AMI must be specified for each region, and here we are supplying IDs for us-east-1 and ap-northeast-1. |

|

In the code above, the AMI IDs are only defined in |

6.2.1. DLAMI (Deep Learning Amazon Machine Image)

AMI (Amazon Machine Image) is a concept that roughly corresponds to an OS (Operating System). Naturally, a computer cannot do anything without an OS, so it is necessary to "install" some kind of OS whenever you start an EC2 instance. The equivalent of the OS that is loaded in EC2 instance is the AMI. For example, you can choose Ubuntu AMI to launch your EC2 instance. As alternative options, you can select Windows Server AMI or Amazon Linux AMI, which is optimized for use with EC2.

However, it is an oversimplification to understand AMI as just an OS. AMI can be the base (empty) OS, but AMI can also be an OS with custom programs already installed. If you can find an AMI that has the necessary programs installed, you can save a lot of time and effort in installing and configuring the environment yourself. To give a concrete example, in the first hands-on session, we showed an example of installing Python 3.6 on an EC2 instance, but doing such an operation every time the instance is launched is tedious!

In addition to the official AWS AMIs, there are also AMIs provided by third parties. It is also possible to create and register your own AMI (see official documentation). You can search for AMIs from the EC2 dashboard. Alternatively, you can use the AWS CLI to obtain a list with the following command (also see official documentation).

$ aws ec2 describe-images --owners amazonDLAMI (Deep Learning AMI)

is an AMI pre-packaged with deep learning tools and programs.

DLAMI comes with popular deep learning frameworks and libraries such as TensorFlow and PyTorch, so you can run deep learning applications immediately after launching an EC2 instance.

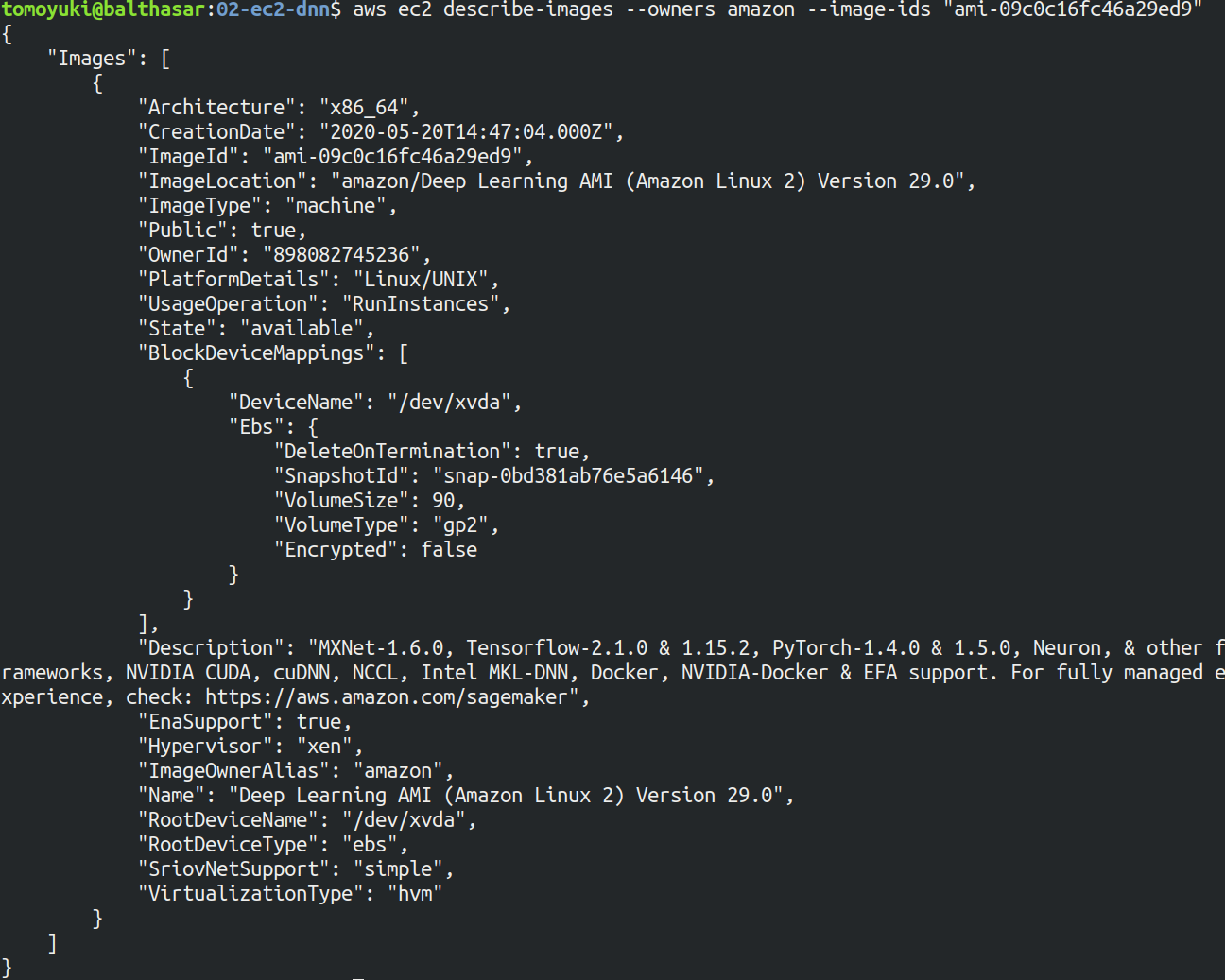

In this hands-on, we will use a DLAMI based on Amazon Linux 2 (AMI ID = ami-09c0c16fc46a29ed9). Let’s use the AWS CLI to get the details of this AMI.

$ aws ec2 describe-images --owners amazon --image-ids "ami-09c0c16fc46a29ed9"

You should get an output like Figure 20. From the output, we can see that the DLAMI has PyTorch versions 1.4.0 and 1.5.0 installed.

|

What exactly is installed in DLAMI? For the interested readers, here is a brief explanation (Reference: official documentation "What Is the AWS Deep Learning AMI?"). At the lowest level, the GPU driver is installed. Without the GPU driver, the OS cannot exchange commands with the GPU. The next layer is CUDA and cuDNN. CUDA is a language developed by NVIDIA for general-purpose computing on GPUs, and has a syntax that extends the C++ language. cuDNN is a deep learning library written in CUDA, which implements operations such as n-dimensional convolution. This is the content of the "Base" DLAMI. The "Conda" DLAMI has libraries such as |

6.3. Deploying the application

Now that we understand the application source code, let’s deploy it.

The deployment procedure is almost the same as the first hands-on.

Here, only the commands are listed (lines starting with # are comments).

If you have forgotten the meaning of each command, review the first hands-on.

You should not forget to set the access key (Section 14.3).

# move to the project directory

$ cd handson/mnist

# create venv and install dependent libraries

$ python3 -m venv .env

$ source .env/bin/activate

$ pip install -r requirements.txt

# Generate SSH key

$ export KEY_NAME="OpenSesame"

$ aws ec2 create-key-pair --key-name ${KEY_NAME} --query 'KeyMaterial' --output text > ${KEY_NAME}.pem

$ mv OpenSesame.pem ~/.ssh/

$ chmod 400 ~/.ssh/OpenSesame.pem

# Deploy!

$ cdk deploy -c key_name="OpenSesame"|

If you did not delete the SSH key you created in the first hands-on, you do not need to create another SSH key. Conversely, if an SSH with the same name already exists, the key generation command will output an error. |

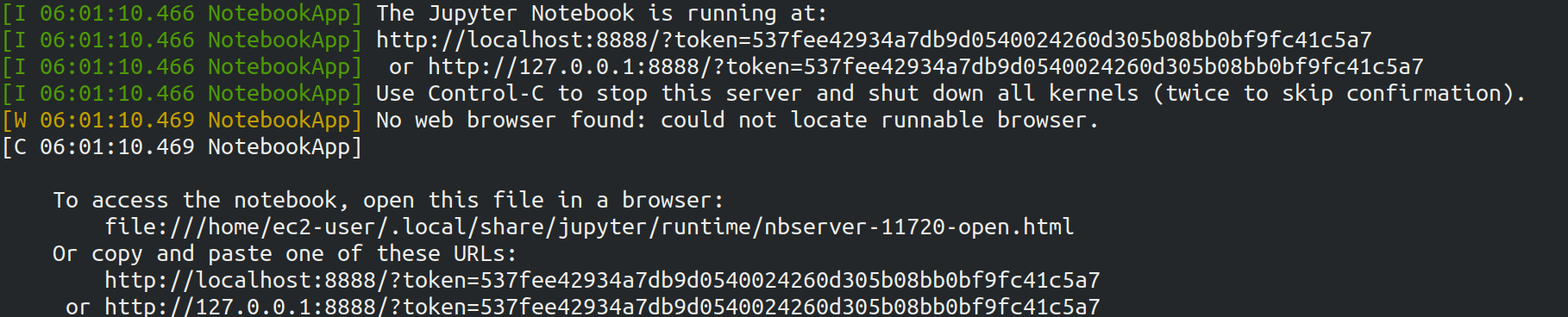

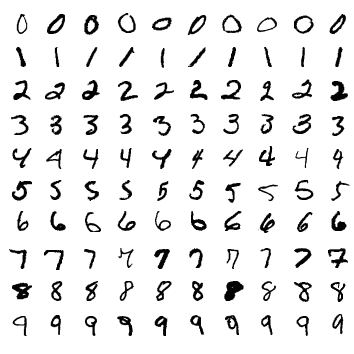

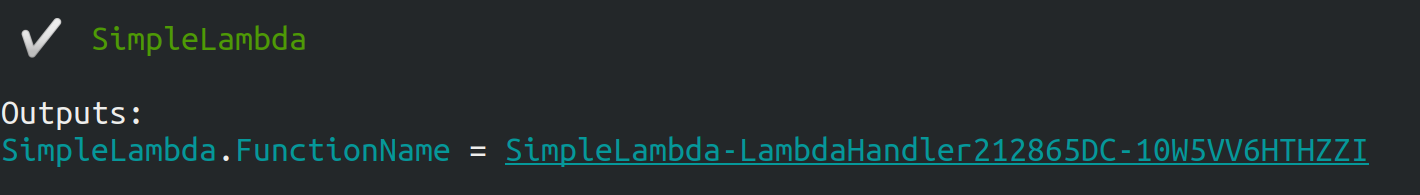

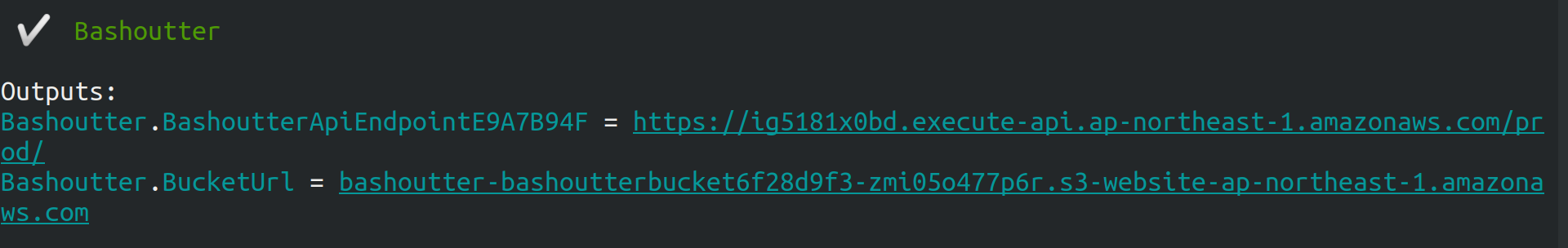

If the deployment is executed successfully, you should get an output like Figure 21.

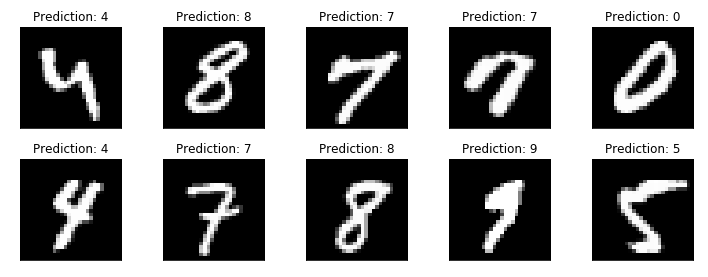

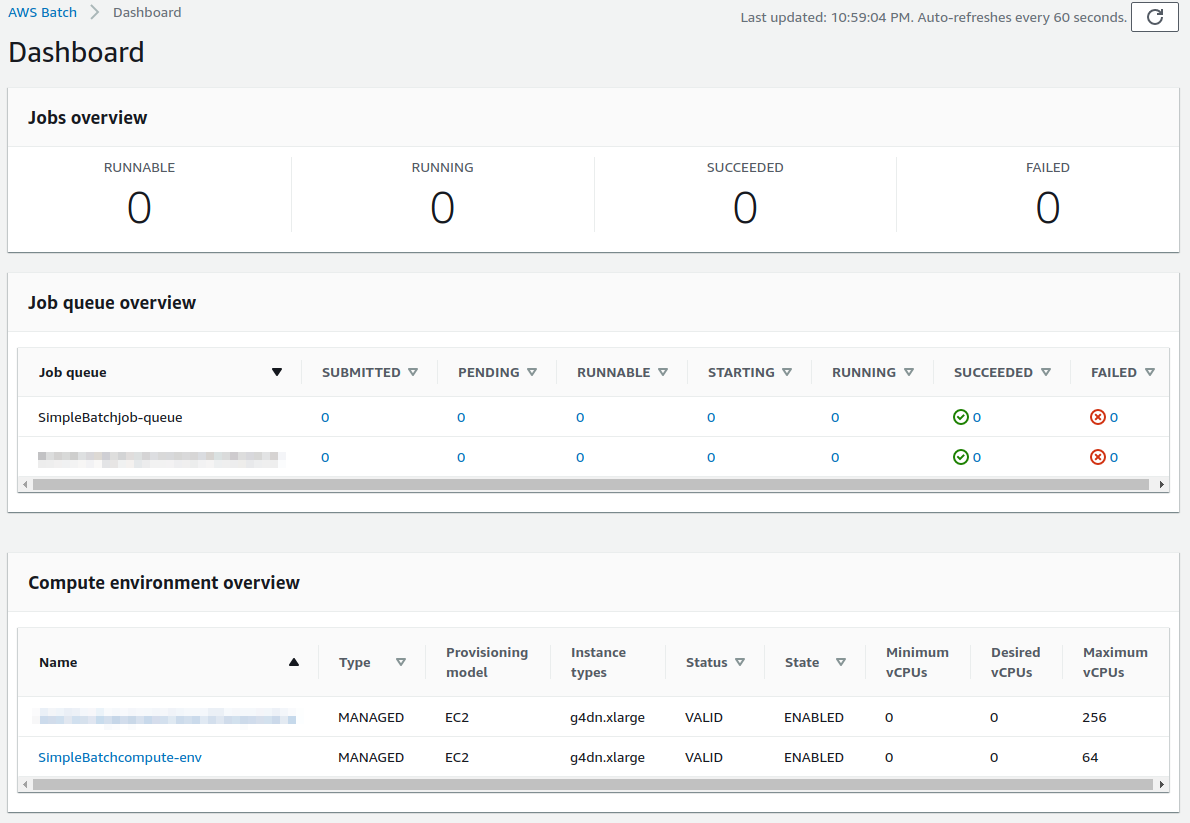

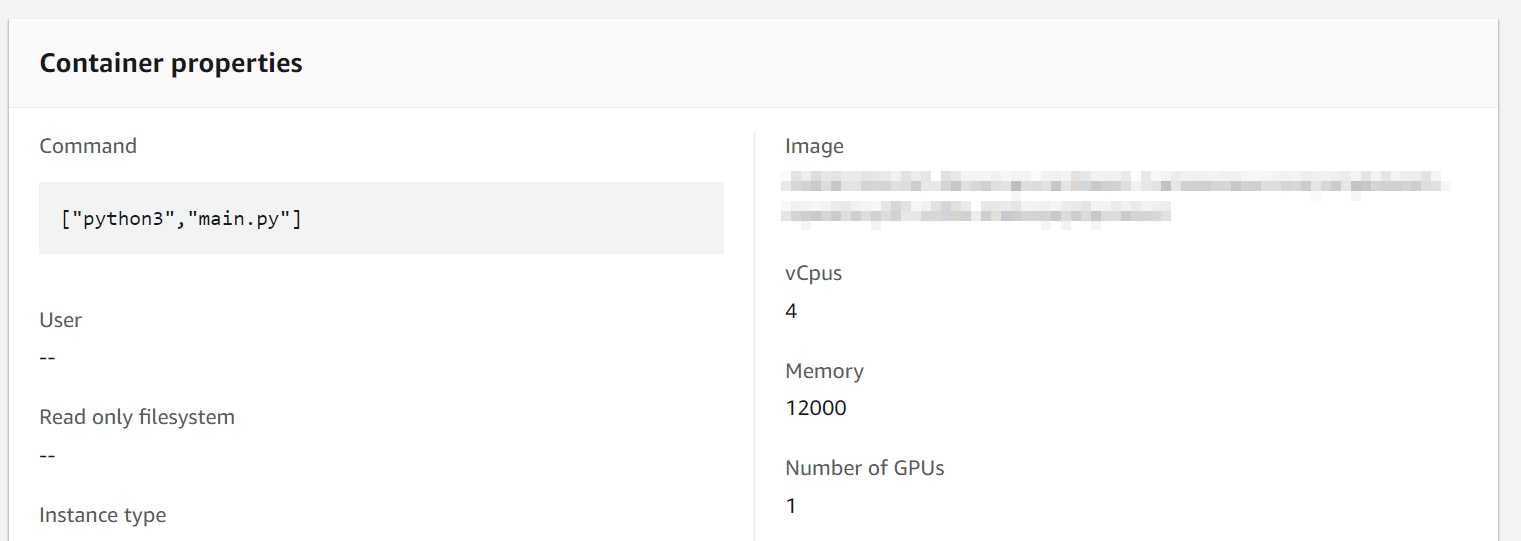

Note the IP address of your instance (the string following InstancePublicIp).